The COPPER project develops new concepts for alternative, advanced training strategies for deep neural networks in assisted and autonomous driving. In particular, we conduct basic research in continual learning, self-supervised and unsupervised learning, and new approaches for direct supervision by human behavior. With these technological advances, we aim to enable more adaptive models for self-driving vehicles that require fewer resources for training and are suitable for a wider range of use cases. The project works towards a more complete visual perception of different traffic situations by considering multiple sensors and tasks simultaneously.

SSMP Team: Spatial Sensing and Machine Perception

Team SSMP (Spatial Sensing and Machine Perception) focuses on the use of diverse 3D/2D sensing modalities (RGB/stereo/ToF cameras, radar, lidar) to address challenging scene perception problems using machine learning/deep learning and traditional geometric computer vision. Such problems include Semantic Scene Reconstruction, 6DoF Object Pose Estimation, Unsupervised Anomaly Detection, SLAM and Deep Sensor Fusion. Indicative application areas are smart building sensing, AI in construction, autonomous driving, industrial robotics and augmented reality.

Projects of SSMP Team

COPPER

Continual Optical Perception for Dynamic Environments in and around of Vehicles

Contact

Dr.-Ing. Jason Raphael Rambach

- Jason_Raphael.Rambach@dfki.de

- Phone: +49 631 20575 3740

Rene Schuster

- Rene.Schuster@dfki.de

- Phone: +49 631 20575 3605

BERTHA

BEhavioural Replication of Human drivers for CCAM

The Horizon Europe project BERTHA kicked off on November 22nd-24th in Valencia, Spain. The project has been granted €7,981,799.50 from the European Commission to develop a Driver Behavioral Model (DBM) that can be used in connected autonomous vehicles to make them safer and more human-like. The resulting DBM will be available on an open-source HUB to validate its feasibility, and it will also be implemented in CARLA, an open-source autonomous driving simulator.

The Connected, Cooperative, and Automated Mobility (CCAM) industry presents important opportunities for the European Union. However, its deployment requires new tools that enable the design and analysis of autonomous vehicle components, together with their digital validation, and a common language between tier suppliers and OEMs.

One of the shortcomings is the lack of a validated and scientifically based Driver Behavioral Model (DBM) covering the aspects of human driving performance. Such a model would make it possible to understand and test the interaction of connected autonomous vehicles (CAVs) with other cars in a safer and more predictable way from a human perspective.

A Driver Behavioral Model could therefore guarantee the digital validation of autonomous vehicle components and, if incorporated into the ECU software, could generate a more human-like response from such vehicles, thus increasing their acceptance.

To cover this need in the CCAM industry, the BERTHA project will develop a scalable and probabilistic Driver Behavioral Model (DBM), mostly based on Bayesian Belief Networks, which will be key to achieving safer and more human-like autonomous vehicles.

The new DBM will be implemented on an open-source HUB, a repository that will allow industrial validation of its technological and practical feasibility, and become a unique approach for the model’s worldwide scalability.

The resulting DBM will be translated into CARLA, an open-source simulator for autonomous driving research developed by the Spanish partner Computer Vision Center. The implementation of BERTHA’s DBM will use diverse demos that allow new driving models to be built in the simulator. It can also be embedded in different immersive driving simulators, such as HAV from IBV.

BERTHA will also develop a methodology which, thanks to the HUB, will share the model with the scientific community to ease its growth. Moreover, its results will include a set of interrelated demonstrators to show the DBM approach as a reference to design human-like, easily predictable, and acceptable behaviour of automated driving functions in mixed traffic scenarios.
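To illustrate what a probabilistic model "based on Bayesian Belief Networks" means in practice, here is a toy network with three binary variables: dense traffic, driver stress and harsh braking. The structure, the variable names and all probabilities are hypothetical and are not taken from BERTHA’s DBM; the sketch only shows how such a network factorises the joint distribution along its edges and answers queries by exact enumeration.

```python
from itertools import product
from typing import Callable

# Hypothetical conditional probability tables (illustrative numbers only,
# not BERTHA's model): dense -> stressed -> harsh braking
P_DENSE = 0.3                                      # P(dense traffic)
P_STRESSED_GIVEN_DENSE = {True: 0.6, False: 0.2}   # P(stressed | dense?)
P_HARSH_GIVEN_STRESSED = {True: 0.5, False: 0.1}   # P(harsh braking | stressed?)

Event = Callable[[bool, bool, bool], bool]


def bernoulli(p_true: float, value: bool) -> float:
    """Probability that a binary variable takes `value` when P(True) = p_true."""
    return p_true if value else 1.0 - p_true


def joint(dense: bool, stressed: bool, harsh: bool) -> float:
    """Joint probability factorised along the chain dense -> stressed -> harsh."""
    return (
        bernoulli(P_DENSE, dense)
        * bernoulli(P_STRESSED_GIVEN_DENSE[dense], stressed)
        * bernoulli(P_HARSH_GIVEN_STRESSED[stressed], harsh)
    )


def probability(query: Event, evidence: Event = lambda d, s, h: True) -> float:
    """P(query | evidence) by summing the joint over all 2**3 assignments."""
    numerator = denominator = 0.0
    for dense, stressed, harsh in product([True, False], repeat=3):
        p = joint(dense, stressed, harsh)
        if evidence(dense, stressed, harsh):
            denominator += p
            if query(dense, stressed, harsh):
                numerator += p
    return numerator / denominator


if __name__ == "__main__":
    p_harsh = probability(lambda d, s, h: h)
    p_dense_given_harsh = probability(lambda d, s, h: d, evidence=lambda d, s, h: h)
    print(f"P(harsh braking) = {p_harsh:.3f}")
    print(f"P(dense traffic | harsh braking) = {p_dense_given_harsh:.3f}")
```

Running it prints 0.228 and 0.447: observing harsh braking raises the belief in dense traffic above its prior of 0.3. Exact enumeration grows exponentially with the number of variables, so a realistic DBM with many variables would rely on dedicated inference algorithms instead.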

Partners

Instituto de Biomecanica de Valencia (ES), Institut Vedecom (FR), Universite Gustave Eiffel (FR), German Research Center for Artificial Intelligence (DE), Computer Vision Center (ES), Altran Deutschland (DE), Continental Automotive France (FR), CIDAUT Foundation (ES), Austrian Institute of Technology (AT), Universitat de València (ES), Europcar International (FR), FI Group (PT), Panasonic Automotive Systems Europe (DE), Korea Transport Institute (KOTI)

Contact

Dr.-Ing. Christian Müller

KIMBA

AI-based process control and automated quality management in the recycling of construction and demolition waste through sensor-based inline monitoring of particle size distributions

With 587.4 million t/a of aggregates used, the construction industry is one of the most resource-intensive sectors in Germany. Substituting recycled (RC) aggregates for primary aggregates conserves natural resources and reduces negative environmental impacts such as greenhouse gas emissions by up to 85%. So far, however, RC building materials cover only 12.5 wt% of the aggregate demand, at 73.3 million t/a. Most of this, 53.9 million t/a (73.5 wt% of the RC building materials), goes into underground construction, which has so far been their main field of application. To secure and expand the ecological advantages of RC building materials, it is therefore crucial that they can also serve more demanding applications in building construction in future. This requires, on the one hand, guaranteeing a sufficient quality of RC building materials and, on the other hand, securing customer acceptance through guaranteed compliance with the applicable standards for building construction.

An essential quality criterion for RC building materials is the particle size distribution (PSD) according to DIN 66165-1. In the state of the art, it is determined by manual sampling and sieve analysis, which is time-consuming and costly, and the results are only available after a considerable delay. Consequently, it is neither possible to react to quality changes at an early stage nor to adjust the parameters of treatment processes directly to changing material flow properties.

This is where the KIMBA project steps in: instead of time-consuming and costly sampling and sieve analyses, PSD analysis in construction waste processing plants is to be automated by sensor-based inline monitoring. The RC material produced will be measured inline during processing using imaging sensor technology.
Subsequently, deep-learning algorithms segment the measured heap into individual particles, whose grain sizes are predicted and aggregated into a digital PSD. These sensor-based PSDs are then to be used to increase the quality, and thus the acceptance, of RC building materials and thereby accelerate the transition to a sustainable circular economy. Based on the proof of concept, two applications will be developed and demonstrated on a large scale: an automated quality management system that continuously records the PSD of the produced RC product, documents it for customers and allows early intervention in the process in case of deviations; and an AI-based assistance system that adaptively controls the treatment process on the basis of sensor-monitored PSDs and machine parameters, so that consistently high product quality is achieved even when the input quality fluctuates.
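The aggregation step, turning predicted particle sizes into a digital PSD, can be sketched as a cumulative "percentage passing" curve, the same form a sieve analysis reports. This is a minimal sketch, not KIMBA’s method: it assumes every segmented particle is a sphere of uniform density (so mass is proportional to the cube of its diameter), uses hypothetical diameters, and ignores the occlusion and overlap effects a real heap measurement has to handle. The function name `cumulative_passing` is illustrative.

```python
from typing import Sequence


def cumulative_passing(
    diameters_mm: Sequence[float], sieve_sizes_mm: Sequence[float]
) -> list[float]:
    """Percentage of the (approximate) total mass finer than each sieve size.

    Assumption: each particle is a sphere of the same density, so its mass is
    proportional to diameter**3. Results are returned in ascending sieve order.
    """
    masses = [d ** 3 for d in diameters_mm]
    total = sum(masses)
    if total <= 0:
        raise ValueError("need at least one particle with positive size")
    curve = []
    for size in sorted(sieve_sizes_mm):
        # Mass of all particles that would pass through an opening of this size
        finer = sum(m for d, m in zip(diameters_mm, masses) if d < size)
        curve.append(100.0 * finer / total)
    return curve


if __name__ == "__main__":
    # Hypothetical diameters, as a segmentation model might predict them
    particles = [1.0, 2.0, 2.0, 4.0]
    sieves = [1.5, 3.0, 5.0]
    for size, passing in zip(sorted(sieves), cumulative_passing(particles, sieves)):
        print(f"< {size} mm: {passing:.1f} %")
```

With these hypothetical diameters the script reports 1.2 %, 21.0 % and 100.0 % passing at 1.5, 3 and 5 mm. The single 4 mm particle dominates the mass, which is why a mass-based curve differs sharply from a simple particle count.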

Partners

MAV Krefeld GmbH, Institut für Anthropogene Stoffkreisläufe (ANTS), Deutsches Forschungszentrum für Künstliche Intelligenz (DFKI), KLEEMANN GmbH, Lehrstuhl für International Production Engineering and Management (IPEM) der Universität Siegen, Point 8 GmbH, vero – Verband der Bau- und Rohstoffindustrie e.V., Verband Deutscher Maschinen- und Anlagenbau e.V. (VDMA)

Contact

ReVise-UP

Improving the process efficiency of the mechanical recycling of post-consumer plastic packaging waste through intelligent material flow management

At 3.2 million tonnes per year, post-consumer packaging waste represents the most significant plastic waste stream in Germany. Despite progress to date, mechanical plastics recycling still has significant potential for improvement: in 2021, only about 27 wt% (1.02 million Mg/a) of post-consumer plastics could be converted into recyclates, and only about 12 wt% (0.43 million Mg/a) served as substitutes for virgin plastics (Conversio Market & Strategy GmbH, 2022).

So far, mechanical plastics recycling has been limited by the high effort of manual material flow characterisation, which leads to a lack of transparency along the value chain. During the ReVise concept phase, it was shown that post-consumer material flows can be characterised automatically using inline sensor technology. The subsequent four-year ReVise implementation phase (ReVise-UP) will explore the extent to which sensor-based material flow characterisation can be implemented on an industrial scale to increase transparency and efficiency in plastics recycling.

Three main effects are expected from this increased data transparency. Firstly, positive incentives for improving collection and product quality should be created in order to increase the quality and use of plastic recyclates. Secondly, sensor-based material flow characterisation is to be used to adapt sorting, treatment and plastics processing to fluctuating material flow properties, which promises a considerable increase in the efficiency of the existing technical infrastructure. Thirdly, the improved data situation should enable a holistic ecological and economic evaluation of the entire value chain, so that technical investments can be targeted more precisely to systematically optimise both ecological and economic benefits.

Our goal is to fundamentally improve the efficiency, cost-effectiveness and sustainability of post-consumer plastics recycling.

Partners

Deutsches Forschungszentrum für Künstliche Intelligenz GmbH, Deutsches Institut für Normung e. V., Human Technology Center der RWTH Aachen University, Hündgen Entsorgungs GmbH & Co. KG, Krones AG, Kunststoff Recycling Grünstadt GmbH, SKZ – KFE gGmbH, STADLER Anlagenbau GmbH, Wuppertal Institut für Klima, Umwelt, Energie gGmbH, PreZero Recycling Deutschland GmbH & Co. KG, bvse – Bundesverband Sekundärrohstoffe und Entsorgung e. V., cirplus GmbH, HC Plastics GmbH, Henkel AG, Initiative „Mülltrennung wirkt“, Procter & Gamble Service GmbH, TOMRA Sorting GmbH

Contact

TWIN4TRUCKS

TWIN4TRUCKS – Digital Twin and AI in the Networked Factory for Integrated Commercial Vehicle Production, Logistics and Quality Assurance

The research project Twin4Trucks (T4T) started on 1 September 2022. It combines scientific research and industrial implementation in a unique way. The project consortium consists of six companies from research and industry. Daimler Truck AG (DTAG) is the consortium leader. It is the largest commercial vehicle manufacturer in the world, and Twin4Trucks aims to optimise its production by implementing new technologies such as digital twins and a Digital Foundation Layer (DFL). The technology initiative SmartFactory Kaiserslautern (SF-KL) and the German Research Center for Artificial Intelligence (DFKI), as visionary research institutions with Production Level 4, set the direction of development. The IT service provider Atos is responsible for data exchange via Gaia-X, quality assurance using AI methods and the implementation concept of the DFL. Infosys is responsible for the network architecture, 5G networks and integration services. The company PFALZKOM is building a regional edge cloud and a data center, and additionally contributes the Gaia-X implementation and operating concepts for networks.

Contact

Simon Bergweiler

- Simon.Bergweiler@dfki.de

- Phone: +49 631 20575 5070

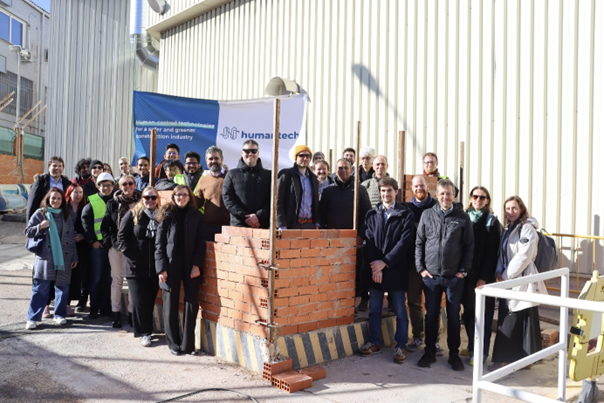

HumanTech

Human Centered Technologies for a Safer and Greener European Construction Industry

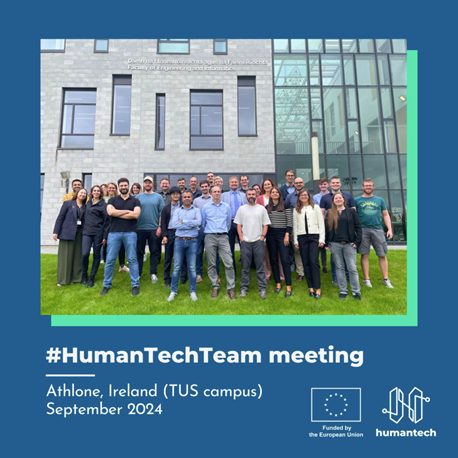

The European construction industry faces three major challenges: improving its productivity, increasing the safety and wellbeing of its workforce, and shifting towards a green, resource-efficient industry. To address these challenges adequately, HumanTech proposes a human-centered approach involving breakthrough technologies such as wearables for worker safety and support, and intelligent robotic technology that can harmoniously co-exist with human workers while also contributing to the green transition of the industry.

Our aim is to achieve major advances beyond the current state of the art in all these technologies, advances that can disrupt the way construction is conducted.

These advances will include:

- Introduction of robotic devices equipped with vision and intelligence that enables them to navigate autonomously and safely in a highly unstructured environment, collaborate with humans and dynamically update a semantic digital twin of the construction site.

- Intelligent, unobtrusive worker protection and support equipment, ranging from exoskeletons triggered by wearable body pose and strain sensors to wearable cameras and XR glasses that provide real-time worker localisation and guidance for the efficient and accurate fulfilment of tasks.

- An entirely new breed of Dynamic Semantic Digital Twins (DSDTs) of construction sites that simulate in detail the current state of a site at the geometric and semantic level, based on an extended BIM formulation (BIMxD).

Partners

Hypercliq IKE, Technische Universität Kaiserslautern, Scaled Robotics SL, Bundesanstalt für Arbeitsschutz und Arbeitsmedizin, Sci-Track GmbH, SINTEF Manufacturing AS, Acciona construccion SA, STAM SRL, Holo-Industrie 4.0 Software GmbH, Fundacion Tecnalia Research & Innovation, Catenda AS, Technological University of the Shannon: Midlands Midwest, Ricoh International BV, Australo Interinnov Marketing Lab SL, Prinstones GmbH, Universita degli Studi di Padova, European Builders Confederation, Palfinger Structural Inspection GmbH, Zürcher Hochschule für Angewandte Wissenschaften, Implenia Schweiz AG, Kajima Corporation

Contact

AuRoRas

Automotive Robust Radar Sensing

Radar sensors are very important in the automotive industry because they can directly measure the speed of other road users. DFKI is working with its partners to develop intelligent software solutions that improve the performance of high-resolution radar sensors. We use machine learning and deep neural networks to detect ghost targets in radar data, which improves the reliability of the sensors and opens up a wide range of possibilities for highly automated driving.
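Radar can measure speed directly because of the Doppler effect: a target moving with radial velocity v shifts the frequency of the reflected signal by f_d = 2·v·f_c / c, where f_c is the carrier frequency and c the speed of light. A minimal sketch inverting this relation, assuming a 77 GHz carrier (a common automotive radar band; the text does not state the frequency of the sensors used in AuRoRas) and a hypothetical shift value:

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def radial_velocity(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Radial velocity (m/s) from a two-way Doppler shift: v = f_d * c / (2 * f_c).

    Positive values mean the target is approaching (received frequency is higher).
    The 77 GHz default carrier is an assumption, not taken from the project.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


if __name__ == "__main__":
    # A hypothetical shift of about 5.1 kHz at 77 GHz corresponds to roughly 10 m/s
    v = radial_velocity(5137.0)
    print(f"{v:.2f} m/s = {v * 3.6:.1f} km/h")
```

In practice an automotive FMCW radar estimates the Doppler shift from the phase change across successive chirps rather than reading it off directly, but the velocity relation is the same.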

Partners

ASTYX GmbH (Dr. Georg Kuschk), Lise-Meitner-Straße 2a, 85521 Ottobrunn, DE

BIT Technology Solutions GmbH (Management), Gewerbering 3, 83539 Pfaffing OT Forsting, DE

Contact

VIZTA

Vision, Identification, with Z-sensing Technologies and key Applications

The VIZTA project, coordinated by STMicroelectronics, aims to develop innovative technologies in the field of optical sensors and laser sources for short- to long-range 3D imaging and to demonstrate their value in several key applications, including automotive, security, smart buildings, mobile robotics for smart cities, and Industry 4.0. The key differentiating 12-inch silicon sensing technologies developed in VIZTA are:

1. Innovative SPAD and lock-in pixels for time-of-flight sensor architectures.
2. Unprecedented, cost-effective on-chip NIR and RGB-Z filter solutions.
3. Complex RGB+Z pixel architectures for multimodal 2D/3D imaging.

For short-range sensors, VIZTA develops advanced VCSEL sources, including wafer-level GaAs optics and the associated high-speed drivers. These differentiating technologies allow the development and validation of innovative 3D imaging sensor products, shown in the following highly integrated prototype demonstrators:

1. A high-resolution (>77,000 points) time-of-flight ranging sensor module with integrated VCSEL, drivers, filters and optics.
2. A very-high-resolution (at least VGA) depth camera sensor with integrated filters and optics.

For medium- and long-range sensing, VIZTA also addresses new LiDAR systems with dedicated sources, optics and sensors. The sensors and emitters are developed by leading semiconductor product suppliers (STMicroelectronics, Philips, III-V Lab) with the support of equipment suppliers (Amat, Semilab) and the research and technology organisation (RTO) CEA-Leti.

The VIZTA project also includes the development of six demonstrators for key applications, including automotive, security, smart buildings, mobile robotics for smart cities, and Industry 4.0, with a good mix of industrial and academic partners (Ibeo, Veoneer, Ficosa, Beamagine, IEE, DFKI, UPC, Idemia, CEA-List, ISD, BCB, IDE, Eurecat). The VIZTA consortium brings together 23 partners from 9 European countries: France, Germany, Spain, Greece, Luxembourg, Latvia, Sweden, Hungary and the United Kingdom.
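Lock-in pixels such as those listed above are typically used for indirect (continuous-wave) time-of-flight: the light source is amplitude-modulated, and each pixel samples the correlation between the returning light and a reference signal at several phase offsets, from which the round-trip phase and hence the distance follow. A minimal sketch of the common four-phase scheme, assuming ideal samples Q_k = B + A·cos(φ − k·π/2) and a hypothetical 20 MHz modulation frequency; the sample model, function names and values are illustrative, not VIZTA’s sensor pipeline:

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def depth_from_four_phases(q0: float, q1: float, q2: float, q3: float,
                           modulation_hz: float) -> float:
    """Distance (m) from correlation samples taken at 0, 90, 180 and 270 degrees.

    Assumes Q_k = B + A*cos(phi - k*pi/2), so Q1 - Q3 = 2A*sin(phi) and
    Q0 - Q2 = 2A*cos(phi); the background term B cancels in both differences.
    """
    phase = math.atan2(q1 - q3, q0 - q2) % (2.0 * math.pi)  # wrap to [0, 2*pi)
    # Round trip: phase = 4*pi*f*d / c, hence d = c*phase / (4*pi*f)
    return SPEED_OF_LIGHT * phase / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz: float) -> float:
    """Beyond c / (2f) the phase wraps around and distances alias."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)


if __name__ == "__main__":
    f_mod = 20e6          # hypothetical modulation frequency
    true_distance = 2.0   # metres
    phi = 4.0 * math.pi * f_mod * true_distance / SPEED_OF_LIGHT
    # Synthetic, noise-free samples with background 1.0 and amplitude 0.5
    samples = [1.0 + 0.5 * math.cos(phi - k * math.pi / 2) for k in range(4)]
    print(depth_from_four_phases(*samples, modulation_hz=f_mod))
    print(unambiguous_range(f_mod))
```

The trade-off the second function exposes is typical for this design: a higher modulation frequency improves depth resolution but shrinks the unambiguous range (about 7.5 m at 20 MHz), which is one reason medium- and long-range sensing is handled with separate LiDAR systems.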

Partners

Universidad Politecnica Catalunya, Commissariat à l'Energie Atomique et aux Energies Alternatives (CEA Paris), Fundacio Eurecat, STMICROELECTRONICS SA, BCB Informática y Control, Alter Technology TÜV Nord SA, FICOMIRRORS SA, Philips Photonics GmbH, Applied Materials France SARL, SEMILAB FELVEZETO FIZIKAI LABORATORIUM RESZVENYTARSASAG, ELEKTRONIKAS UN DATORZINATNU INSTITUTS, LUMIBIRD, IEE S.A., IBEO Automotive Systems GmbH, STMICROELECTRONICS RESEARCH & DEVELOPMENT LTD, IDEMIA IDENTITY & SECURITY FRANCE, Beamagine S.L., Integrated Systems Development S.A., VEONEER SWEDEN AB, III-V Lab, STMICROELECTRONICS (ALPS) SAS, STMICROELECTRONICS GRENOBLE 2 SAS

Contact

Be-greifen

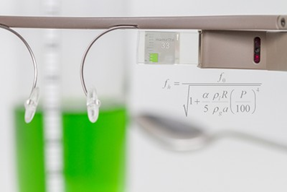

Comprehensible, interactive experiments: practice and theory in STEM studies

The project is funded by the Federal Ministry of Education and Research (BMBF). It combines tangible, manipulable objects (“tangibles”) with advanced technologies such as augmented reality to develop new, intuitive user interfaces. Interactive experiments are intended to actively support the learning process in STEM studies and to provide learners with additional theoretical information about physics.

The project uses the interfaces of smartphones, smartwatches and smart glasses. One example is a pair of smart glasses that lets users call up content through a combination of subtle head movements, eyebrow movements and voice commands and view it on a display mounted above the eye. Because information is accessed in this casual way, students are not distracted while carrying out the experiment and can still reach for and manipulate the objects.

A research prototype developed as a preliminary study demonstrates these developments. For this purpose, scientists at DFKI and at the Technical University of Kaiserslautern developed an app that helps students determine the relationship between the fill level of a glass and the pitch of the sound. The gPhysics application captures the amount of water, measures the sound frequency and plots the results in a diagram. The app can be operated entirely with head gestures, without manual interaction. In gPhysics, the water quantity is recorded with a camera, and the determined value can be corrected with head gestures or voice commands if required. The microphone of the Google Glass measures the sound frequency. Both values are displayed in a graph that is continuously updated on the Google Glass display. In this way, learners can follow the frequency curve in relation to the water level directly while filling the glass. Since the curve is generated comparatively quickly, learners can test different hypotheses during the interaction by varying the parameters of the experiment.
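The text does not say how gPhysics derives the pitch from the microphone signal. As a hedged illustration, one straightforward approach is to pick the strongest bin of a discrete Fourier transform over a short audio buffer. The function `dominant_frequency` is hypothetical; it uses a naive O(n²) DFT so the example stays self-contained, whereas a real app would use an FFT and a pitch tracker that is robust to harmonics and noise. It assumes mono samples at a known sample rate.

```python
import cmath
import math
from typing import Sequence


def dominant_frequency(samples: Sequence[float], sample_rate_hz: float) -> float:
    """Frequency (Hz) of the strongest non-DC bin of a naive discrete Fourier transform.

    Resolution is limited to sample_rate_hz / len(samples); the O(n^2) loop is
    fine for a short illustrative buffer, not for real-time audio.
    """
    n = len(samples)
    best_bin, best_magnitude = 0, -1.0
    for k in range(1, n // 2 + 1):  # skip k = 0 (DC offset), stop at Nyquist
        coefficient = sum(
            x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples)
        )
        if abs(coefficient) > best_magnitude:
            best_bin, best_magnitude = k, abs(coefficient)
    return best_bin * sample_rate_hz / n


if __name__ == "__main__":
    rate = 4000.0  # hypothetical sample rate in Hz
    tone = [math.sin(2 * math.pi * 440.0 * i / rate) for i in range(400)]
    print(dominant_frequency(tone, rate))
```

Skipping the DC bin matters for real microphone data, which often carries a constant offset that would otherwise dominate the spectrum.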

In the project, further experiments on the physical foundations of mechanics and thermodynamics are being built. In addition, the consortium develops technologies that enable learners to discuss video and sensor recordings, analyze their experiments in a cloud, and exchange ideas with fellow students or compare results.

Partners

DFKI coordinates the project with five other partners from research and practice: the Technical University of Kaiserslautern, studio klv GmbH & Co. KG Berlin, University of Stuttgart, Con Partners GmbH from Bremen and Embedded Systems Academy GmbH from Barsinghausen.

Funding programme: German BMBF

- Begin: 01.07.2016

- End: 30.06.2019

Contact

SSMP News

2025

Article in IEEE Transactions on Image Processing (TIP) Journal

Our article “Resolving Symmetry Ambiguity in Correspondence-based Methods for Instance-level Object Pose Estimation” was published in the prestigious IEEE Transactions on Image Processing (TIP) journal. The work is a collaboration of DFKI with Zhejiang Univers...

2024

Invited Talk by Dr. Jason Rambach at the NEM Summit 2024

Dr. Jason Rambach gave a talk on “Building Virtual Worlds with 3D Sensing and AI” at the NEM Summit 2024 in Brussels on 23 October 2024. The presentation was part o...

HumanTech General Assembly Meeting: Advancing AI in Construction at TUS Athlone Campus, Ireland

HumanTech at Sustainable Places 2024

Dr. Jason Rambach, coordinator of the EU Horizon Project HumanTech, participated in the “Digital Twins for Sustainable Construction” session at the ...

3rd place in Scan-to-BIM challenge (CV4_AEC Workshop, CVPR 2024) for HumanTech project team

The team of the EU Horizon Project HumanTech, consisting of Mahdi Chamseddine and Dr. Jason Rambach from DFKI Augmented Vis...

FG2024: 2 papers accepted

We are excited to share that the Augmented Vision group got two papers accepted at the IEEE International Conference on Automatic Face and Gesture Recognition (FG 2024), the premier international forum for research in image and video-based face, gesture, and body movement recognition. ...

2nd Workshop on AI and Robotics in Construction at ERF 2024

Dr. Jason Rambach, coordinator of the EU Horizon Project HumanTech, organized the 2nd workshop on “AI and Robotics in Construction” at the European...

6 papers from the Augmented Vision department accepted at the CVPR conference!

We are proud to announce that the researchers of the Augmented Vision department will present 6 papers at the upcoming CVPR conference, taking place from Monday, June 17th, to Friday, June 21st, 2024, at the Seattle Convention Center, Seattle, USA.

DFKI Augmented Vision researcher Mahdi Chamseddine received the Best Industrial Paper award at International Conference on Pattern Recognition Applications and Methods (ICPRAM) 2024 for the paper:

WACV 2024: 2 papers accepted

We are happy to announce that the Augmented Vision group presented 2 papers at the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), which took place from 4 to 8 January 2024 in Waikoloa, Hawaii.

2023

EU Project BERTHA starts with participation of DFKI AV and ASR departments

The BERTHA project receives EU funding to develop a Driver Behavioral Model that will make autonomous vehicles safer and more human-like

- The project, funded by the Europea...

Kick-off meeting of the KIMBA research project

Kick-off meeting of the ReVise-UP research project

DFKI Augmented Vision researchers Praveen Nathan, Sandeep Inuganti, Yongzhi Su and Jason Rambach received their 1st ...

DFKI AV – Stellantis Collaboration on Radar-Camera Fusion – Papers at GCPR and EUSIPCO

DFKI Augmented Vision is collaborating with Stellantis on the topic of Radar-Camera Fusion for Automotive Object Detection using Deep Learning. Recently, two new publications were accepted to GCPR 2023 and ...

ICCV 2023: 4 papers accepted

We are happy to announce that the Augmented Vision group will present 4 papers at the upcoming ICCV 2023 Conference, 2-6 October, Paris, France. The IEEE/CVF International Conference on Computer Vision (ICCV) is the premier international computer vision event. Homepage: ...

3rd place in Scan-to-BIM challenge (CV4_AEC Workshop, CVPR 2023) for HumanTech project team

The team of the EU Horizon Project HumanTech, consisting of Mahdi Chamseddine and Dr. Jason Rambach from DFKI Augmented Vis...

Special Issue on the IEEE ARSO 2023 Conference: Human Factors in Construction Robotics

Dr. Jason Rambach, coordinator of the EU Horizon Project HumanTech, co-organized a special session on “Human Factors in Construction Robotics” at the ...

Workshop on AI and Robotics in Construction at ERF 2023

Dr. Jason Rambach, coordinator of the EU Horizon Project HumanTech, co-organized a workshop on “AI and Robotics in Construction” at the European ...

Article in IEEE Robotics and Automation Letters (RA-L) journal

We are happy to announce that our article “OPA-3D: Occlusion-Aware Pixel-Wise Aggregation for Monocular 3D Object Detection” was published in the prestigious IEEE Robotics and Automation Letters (RA-L) Journal. The work is a collaboration of DFKI with the...

Radar Driving Activity Dataset (RaDA) Released

DFKI Augmented Vision recently released the first publicly available UWB Radar Driving Activity Dataset (RaDA), consisting of over 10k data samples from 10 different participants annotated with 6 driving activities. The dataset was recorded in the DFKI driving simulator e...

2022

VIZTA Project successfully concluded after 42 months

The Augmented Vision department of DFKI participated in the VIZTA project, coordinated by STMicroelectronics, aiming at developing innovative technologies in the field of optical sensors and laser sources for short- to long-range 3D imaging and to demonstrate their value ...

DFKI Augmented Vision Researchers win two awards in Object Pose Estimation challenge (BOP Challenge, ECCV 2022)

DFKI Augmented Vision researchers Yongzhi Su, Praveen Nathan and Jason Rambach received their 1st place award in the prestigious BOP Challenge 2022 in the categories Overall Best Segmentation Method and The Best BlenderProc-Trained Segmentation Method....

Kick-Off for EU Project “HumanTech”

Our Augmented Vision department is the coordinator of the new large European project "HumanTech". The Kick-Off meeting was held on July 20th, 2022, at DFKI in Kaiserslautern. Please read the whole article here: Artificia...

ARTIFICIAL INTELLIGENCE FOR A SAFE AND SUSTAINABLE CONSTRUCTION INDUSTRY

Please check out the article "Artificial intelligence for a safe and sustainable construction industry (dfki.de)" concerning the new EU project HumanTech which is coordinated by ...

Augmented Vision @CVPR 2022

DFKI Augmented Vision had a strong presence at the recent CVPR 2022 Conference held on June 19th-23rd, 2022, in New Orleans, USA. The IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR) is the premier annual computer vision event internationally. Homepage: ...

Keynote Presentation by Dr. Jason Rambach in Computer Vision session of the Franco-German Research and Innovation Network event

On June 14th, 2022, Dr. Jason Rambach gave a keynote talk in the Computer Vision session of the Franco-German Research and Innovation Network event held at the Inria headquarters in Versailles, Paris, France. In the talk, an overview of the current activities of the...

CVPR 2022: Two papers accepted

We are happy to announce that the Augmented Vision group will present two papers at the upcoming CVPR 2022 Conference from June 19th-23rd in New Orleans, USA. The IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR) is the premier annual computer vision even...

2021

2 Papers accepted at BMVC 2021 Conference

We are happy to announce that the Augmented Vision group will present 2 papers at the upcoming BMVC 2021 Conference, 22-25 November, 2021:

The British Machine Vision Conference (BMVC) is the British Machine Vision Assoc...

DFKI AV – Stellantis Collaboration on Radar-Camera Fusion – 2 publications

DFKI Augmented Vision has been working with Stellantis on the topic of Radar-Camera Fusion for Automotive Object Detection using Deep Learning since 2020. The collaboration has already led to two publications, in ICCV 2021 (International Conference on Computer Vision – ...

VIZTA Project Time-of-Flight Camera Datasets Released

As part of the research activities of DFKI Augmented Vision in the VIZTA project (https://www.vizta-ecsel.eu/), two publicly available datasets have been released and are available for download. The TIMo dataset is a ...

XR for nature and environment survey

On July 29th, 2021, Dr. Jason Rambach presented the survey paper “A Survey on Applications of Augmented, Mixed and Virtual Reality for Nature and Environment” at the 23rd Human Computer Interaction Conference ...

VIZTA Project 24M Review and public summary

DFKI participates in the VIZTA project, coordinated by STMicroelectronics, aiming at developing innovative technologies in the field of optical sensors and laser sources for short- to long-range 3D imaging and to demonstrate their value in several key applications in...

Paper accepted at ICIP 2021

We are happy to announce that our paper “SEMANTIC SEGMENTATION IN DEPTH DATA : A COMPARATIVE EVALUATION OF IMAGE AND POINT CLOUD B...

TiCAM Dataset for in-Cabin Monitoring released

As part of the research activities of DFKI Augmented Vision in the VIZTA project (https://www.vizta-ecsel.eu/), we have published the open-source dataset for automotive in-cabin monitoring with a wide-angle time-of-flight depth se...

Presentation on Machine Learning and Computer Vision by Dr. Jason Rambach

On March 4th, 2021, Dr. Jason Rambach gave a talk on Machine Learning and Computer Vision at the GIZ (Deutsche Gesellschaft für Internationale Zusammenarbeit) workshop o...

VIZTA project: 18-month public project summary released

DFKI participates in the VIZTA project, coordinated by STMicroelectronics, aiming at developing innovative technologies in the field of optical sensors and laser sources for short- to long-range 3D imaging and to demonstrate their value in several key applications in...

3 Papers accepted at VISAPP 2021

We are excited to announce that the Augmented Vision group will present 3 papers at the upcoming VISAPP 2021 Conference, February 8th-10th, 2021:

The International Conference on Computer Vision Theory and Applications ...

Article in MDPI Sensors journal

We are happy to announce that our paper “SynPo-Net–Accurate and Fast CNN-Based 6DoF Object Pose Estimation Using Synthetic Training” has been accepted for publication in the MDPI Sensors journal, Special Issue Object Tracking and Motion Analysis. Sensors (ISSN 14...

2020

Four papers accepted at WACV 2021

The Winter Conference on Applications of Computer Vision (WACV 2021) is IEEE’s and the PAMI-TC’s premier meeting on applications of computer vision. With its high quality and low cost, it provides an exceptional value for students...

Head of SSMP Team

| Member | Position | Email | Phone |
|---|---|---|---|
| Dr. Jason Raphael Rambach | Deputy Director | jason_raphael.rambach@dfki.de | +49 631 20575 3740 |

Members of SSMP Team

| Member | Position | Email | Phone |
|---|---|---|---|
| Dr. Bruno Mirbach | Senior Researcher | Bruno_Walter.Mirbach@dfki.uni-kl.de | +49 631 20575 3511 |
| Mahdi Chamseddine | Researcher | Mahdi.Chamseddine@dfki.de | +49 631 20575 3527 |
| Pelle Thielmann | Researcher | Pelle.Thielmann@dfki.de | |
| Sai Srinivas Jeevanandam | Researcher | Sai_Srinivas.Jeevanandam@dfki.de | |
| Sandeep Prudhvi Krishna Inuganti | Researcher | Sandeep_Prudhvi_Krishna.Inuganti@dfki.de | |
| Shashank Mishra | Researcher | Shashank.Mishra@dfki.de | |
| Shreedhar Govil | Researcher | Shreedhar.Govil@dfki.de | |
| Yu Zhou | Researcher | yu.zhou@dfki.de | |

Publications of SSMP Team

2025

Resolving Symmetry Ambiguity in Correspondence-based Methods for Instance-level Object Pose Estimation

In: IEEE Transactions on Image Processing (TIP), IEEE, 3/2025.


2024

Which Time Series Domain Shifts can Neural Networks Adapt to?

In: Proceedings of the European Signal Processing Conference (EUSIPCO-2024), IEEE, 2024.


HiPose: Hierarchical Binary Surface Encoding and Correspondence Pruning for RGB-D 6DoF Object Pose Estimation

In: IEEE/CVF (Ed.). Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR-2024), June 17-21, Seattle, Washington, USA, IEEE/CVF, 2024.


In-Domain Inversion for Improved 3D Face Alignment on Asymmetrical Expressions

In: Proceedings of the 18th IEEE International Conference on Automatic Face and Gesture Recognition. IEEE International Conference on Automatic Face and Gesture Recognition (FG-2024), May 27-31, Istanbul, Turkey, IEEE, 5/2024.


Single Frame Semantic Segmentation Using Multi-Modal Spherical Images

In: Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV-2024), IEEE Xplore, 2024.


Achieving RGB-D Level Segmentation Performance From a Single ToF Camera

In: Proceedings of the International Conference on Pattern Recognition Applications and Methods (ICPRAM-2024), February 24-26, Rome, Italy, SCITEPRESS, 2/2024.


CaRaCTO: Robust Camera-Radar Extrinsic Calibration with Triple Constraint Optimization

In: International Conference on Pattern Recognition Applications and Methods (ICPRAM-2024), February 24-26, Rome, Italy, SCITEPRESS, 2024.


2023

Time-of-Flight Depth Sensing for Automotive Safety and Smart Building Applications: The VIZTA Project

In: IEEE (Ed.). IEEE Access, Vol. 11, Pages 105819-105829, IEEE, 10/2023.


U-RED: Unsupervised 3D Shape Retrieval and Deformation for Partial Point Clouds

In: IEEE/CVF (Ed.). Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV-2023), October 2-6, Paris, France, IEEE/CVF, 2023.


RC-BEVFusion: A Plug-In Module for Radar-Camera Bird’s Eye View Feature Fusion

In: DAGM (Ed.). Proceedings of the Annual Symposium of the German Association for Pattern Recognition (DAGM-2023), September 19-22, Heidelberg, BW, Germany, DAGM, 9/2023.


Confidence-Aware Clustered Landmark Filtering for Hybrid 3D Face Tracking

In: IEEE (Ed.). Proceedings of the 30th ICIP. IEEE International Conference on Image Processing (ICIP-2023), October 8-11, Kuala Lumpur, Malaysia, IEEE, 2023.


Cross-Dataset Experimental Study of Radar-Camera Fusion in Bird’s-Eye View

In: IEEE (Ed.). Proceedings of the 31st European Signal Processing Conference. European Signal Processing Conference (EUSIPCO-2023), September 4-8, Helsinki, Finland, IEEE, 2023.


Ontology-based Semantic Labeling for RGB-D and Point Cloud Datasets

In: Computing in Construction. European Conference on Computing in Construction (EC3-2023), July 10-12, Heraklion, Crete, Greece, ISBN 978-0-701702-73-1, EC3, 2023.


Principles of Object Tracking and Mapping

In: Andrew Yeh Ching Nee; Soh Khim Ong (Eds.). Springer Handbook of Augmented Reality. Pages 53-84, Springer Handbooks, ISBN 978-3-030-67821-0, Springer, Switzerland, 1/2023.


OPA-3D: Occlusion-Aware Pixel-Wise Aggregation for Monocular 3D Object Detection

In: IEEE Robotics and Automation Letters (RA-L), Vol. 8, Pages 1327-1334, IEEE, 3/2023.


Driving Activity Recognition Using UWB Radar and Deep Neural Networks

In: Sensors - Open Access Journal (Sensors), Vol. 23, No. 2, Pages 1-15, MDPI, 1/2023.


2022

Editorial – Advanced Scene Perception for Augmented Reality

In: Journal of Imaging, Special Issue “Advanced Scene Perception for Augmented Reality”, MDPI, 10/2022.


Unsupervised Anomaly Detection from Time-of-Flight Depth Images

In: CVPR Workshop on Perception Beyond the Visible Spectrum (PBVS-2022), held at the IEEE/CVF Conference on Computer Vision and Pattern Recognition, June 19-20, United States, IEEE Computer Society, 2022.


TIMo—A Dataset for Indoor Building Monitoring with a Time-of-Flight Camera

In: Sensors - Open Access Journal (Sensors), Vol. 22, No. 11, Pages 3992-4005, MDPI, Basel, 5/2022.


TICaM: A Time-of-flight In-car Cabin Monitoring Dataset

In: British Machine Vision Conference (Ed.). Proceedings of the British Machine Vision Conference (BMVC-2021), Online, BMVA, 2021.


PlaneRecNet: Multi-Task Learning with Cross-Task Consistency for Piece-Wise Plane Detection and Reconstruction from a Single RGB Image

In: British Machine Vision Conference (BMVC-2021), November 22-25, United Kingdom, British Machine Vision Conference, 11/2021.


ZebraPose: Coarse to Fine Surface Encoding for 6DoF Object Pose Estimation

In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR-2022), June 19-24, New Orleans, Louisiana, USA, IEEE/CVF, 2022.


Fusion Point Pruning for Optimized 2D Object Detection with Radar-Camera Fusion

In: Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV-2022), January 4-8, Hawaii, USA, IEEE, 2022.


Nonlinear Optimization of Light Field Point Cloud

In: Denis Laurendeau (Academic Ed.). Sensors - Open Access Journal (Sensors), Vol. 22(3), Pages 814-829, MDPI, 1/2022.


Unsupervised Image-to-Image Translation: A Review

In: Sensors - Open Access Journal (Sensors), Vol. 22, Article 8540, MDPI, 2022.


2021

To Drive or to Be Driven? The Impact of Autopilot, Navigation System, and Printed Maps on Driver’s Cognitive Workload and Spatial Knowledge

In: ISPRS International Journal of Geo-Information, Vol. 10, No. 10, Pages 1-19, MDPI, 10/2021.


Deployment of Deep Neural Networks for Object Detection on Edge AI Devices with Runtime Optimization

In: Proceedings of the IEEE International Conference on Computer Vision Workshops - ERCVAD Workshop on Embedded and Real-World Computer Vision in Autonomous Driving. International Conference on Computer Vision (ICCV-2021), October 11-17, Online/Virtual, IEEE, 2021.


Visual SLAM with Graph-Cut Optimized Multi-Plane Reconstruction

In: IEEE International Symposium on Mixed and Augmented Reality (ISMAR-2021), October 4-8, Bari, Italy, IEEE, 2021.


Semantic Segmentation in Depth Data: A Comparative Evaluation of Image and Point Cloud Based Methods

In: Proceedings of the 28th IEEE International Conference on Image Processing (ICIP-2021), September 19-22, Anchorage, Alaska, United States, IEEE, 2021.


Mixed reality applications in urology: Requirements and future potential

In: Elsevier (Ed.). Annals of Medicine and Surgery, Vol. 66, Pages 1-6, Elsevier, 2021.


PlaneSegNet: Fast and Robust Plane Estimation Using a Single-stage Instance Segmentation CNN

In: IEEE International Conference on Robotics and Automation (ICRA-2021), May 30-June 5, Xi'an, China, IEEE, 2021.


An Adversarial Training based Framework for Depth Domain Adaptation

In: Proceedings of the 16th VISAPP. 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP-2021), February 8-10, Online, Springer, 2021.


A Survey on Applications of Augmented, Mixed and Virtual Reality for Nature and Environment

In: Proceedings of the 23rd HCI. International Conference on Human-Computer Interaction (HCII-2021), July 24-29, Online, United States, Springer, 2021.


OFFSED: Off-Road Semantic Segmentation Dataset

In: VISAPP 2021 Proceedings. International Conference on Computer Vision Theory and Applications (VISAPP-2021), part of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, February 8-10, Online, SCITEPRESS Digital Library, 2021.


SynPo-Net–Accurate and Fast CNN-Based 6DoF Object Pose Estimation Using Synthetic Training

In: SangMin Yoon (Ed.). Sensors - Open Access Journal (Sensors), Vol. 21(1), Special Issue “Object Tracking and Motion Analysis”, Page 300, MDPI, 2021.


2020

TGA: Two-level Group Attention for Assembly State Detection

In: Proceedings of the 19th IEEE ISMAR. IEEE International Symposium on Mixed and Augmented Reality (ISMAR-2020), November 9-13, Recife/Porto de Galinhas, Brazil, IEEE, 2020.


Ghost Target Detection in 3D Radar Data using Point Cloud based Deep Neural Network

In: International Conference on Pattern Recognition (ICPR-2020), January 12-15, Milan, Italy, IEEE, 2021.


Learning Priors for Augmented Reality Tracking and Scene Understanding

PhD Thesis, Technische Universität Kaiserslautern, ISBN 978-3-8439-4555-4, Dr. Hut, München, 9/2020.


2019

Deep Multi-State Object Pose Estimation for Augmented Reality Assembly

In: Proceedings of the 18th IEEE ISMAR. IEEE International Symposium on Mixed and Augmented Reality (ISMAR-2019), October 14-18, Beijing, China, IEEE, 2019.


SlamCraft: Dense Planar RGB Monocular SLAM

In: Proceedings of the IAPR Conference on Machine Vision Applications (MVA-2019), May 27-31, Tokyo, Japan, IAPR, 2019.


Augmented Reality in Physics Education: Motion Understanding Using an Augmented Airtable

In: EuroVR (Ed.). European Association on Virtual and Augmented Reality. EuroVR Conference (EuroVR-2019), October 23-25, Tallinn, Estonia, Springer, 2019.
