We are happy to announce that our paper “Fast Gravitational Approach for Rigid Point Set Registration With Ordinary Differential Equations” has been accepted for publication in the journal IEEE Access (Impact Factor: 3.745).

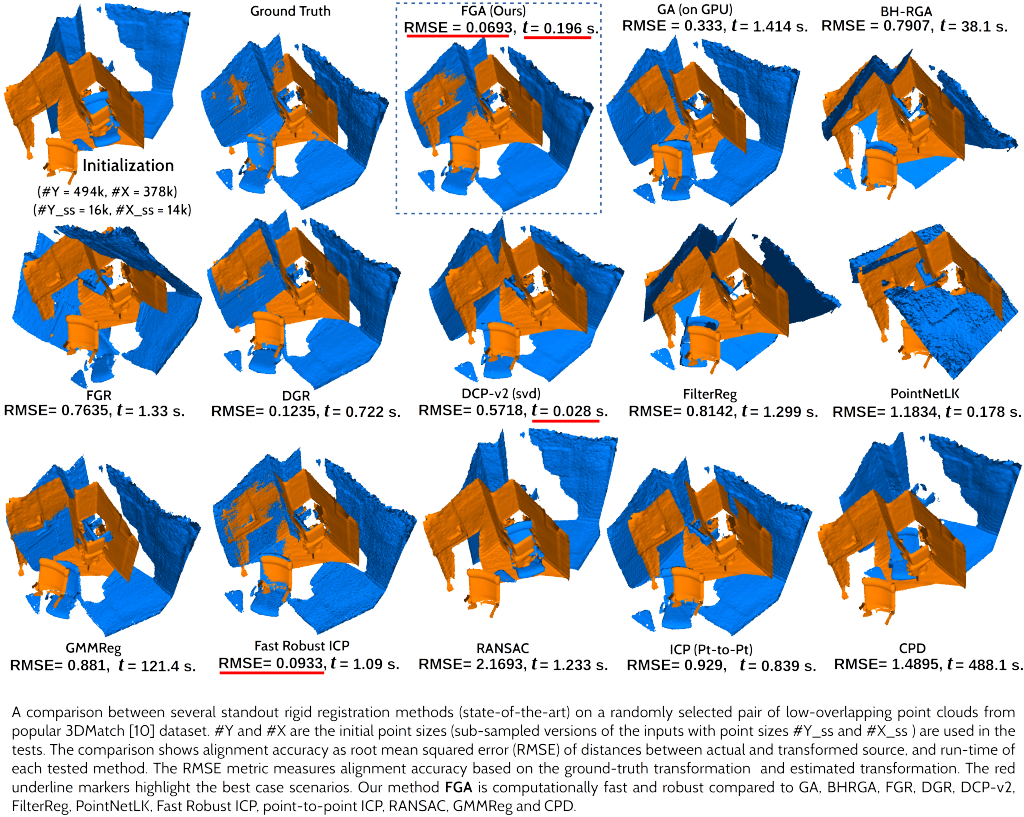

Abstract: This article introduces a new physics-based method for rigid point set alignment called Fast Gravitational Approach (FGA). In FGA, the source and target point sets are interpreted as rigid particle swarms with masses interacting in a globally multiply-linked manner while moving in a simulated gravitational force field. The optimal alignment is obtained by explicit modeling of forces acting on the particles as well as their velocities and displacements with second-order ordinary differential equations of n-body motion. Additional alignment cues can be integrated into FGA through particle masses. We propose a smooth-particle mass function for point mass initialization, which improves robustness to noise and structural discontinuities. To avoid the quadratic complexity of all-to-all point interactions, we adapt a Barnes-Hut tree for accelerated force computation and achieve quasilinear complexity. We show that the new method class has characteristics not found in previous alignment methods such as efficient handling of partial overlaps, inhomogeneous sampling densities, and coping with large point clouds with reduced runtime compared to the state of the art. Experiments show that our method performs on par with or outperforms all compared competing deep-learning-based and general-purpose techniques (which do not take training data) in resolving transformations for LiDAR data and gains state-of-the-art accuracy and speed when coping with different data.

Authors: Sk Aziz Ali, Kerem Kahraman, Christian Theobalt, Didier Stricker, Vladislav Golyanik

Link to the paper: https://ieeexplore.ieee.org/document/9442679
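
For readers who want a feel for the all-to-all particle interaction mentioned in the abstract, here is a minimal sketch in Python with NumPy. It is not the implementation from the paper. It computes softened Newtonian forces from every target particle on every source particle by direct summation (the quadratic-cost baseline that a Barnes-Hut tree is meant to avoid) and then moves the source set as one body, translation only. The function names, the softening length `eps`, the constant `G`, the `damping` factor, and the simple Euler update are all assumptions made for illustration; the paper itself solves for a full rigid transformation, including rotation.

```python
import numpy as np


def gravitational_forces(source, target, m_source, m_target, G=1.0, eps=0.1):
    """Softened gravitational force of all target particles on each source particle.

    source: (M, 3) positions, target: (N, 3) positions,
    m_source: (M,) masses, m_target: (N,) masses.
    Returns an (M, 3) array. Direct summation, so the cost is O(M * N).
    G and eps are illustrative parameters, not values from the paper.
    """
    # Vectors pointing from every source particle to every target particle.
    diff = target[None, :, :] - source[:, None, :]        # (M, N, 3)
    # Squared distances with softening to avoid division by zero.
    dist2 = np.sum(diff ** 2, axis=-1) + eps ** 2         # (M, N)
    # Magnitude factor G * m_i * m_j / (r^2 + eps^2)^(3/2).
    weights = G * m_source[:, None] * m_target[None, :] / dist2 ** 1.5
    # Sum the contributions of all target particles.
    return np.sum(weights[:, :, None] * diff, axis=1)     # (M, 3)


def rigid_translation_step(source, velocity, forces, m_source, dt=0.01, damping=0.5):
    """One damped Euler step that translates the whole source set as a single body.

    Assumption: translation only. Rotation is left out to keep the sketch short.
    """
    total_force = forces.sum(axis=0)                      # net force on the body
    acceleration = total_force / m_source.sum()           # a = F / total mass
    velocity = (1.0 - damping) * velocity + dt * acceleration
    return source + dt * velocity, velocity
```

Calling `gravitational_forces` and `rigid_translation_step` in a loop pulls the source set toward the target until the damped velocity settles. Swapping the direct summation for a tree-based approximation is what brings the cost down from quadratic to quasilinear, as the abstract describes.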