We are happy to announce that our paper “Generative View Synthesis: From Single-view Semantics to Novel-view Images” has been accepted for publication at the Thirty-fourth Conference on Neural Information Processing Systems (NeurIPS 2020), which will take place online from December 6th to 12th. NeurIPS is one of the top conferences in the field of machine learning. Our paper is one of the 1900 accepted out of 9454 submissions (acceptance rate: 20.1%).

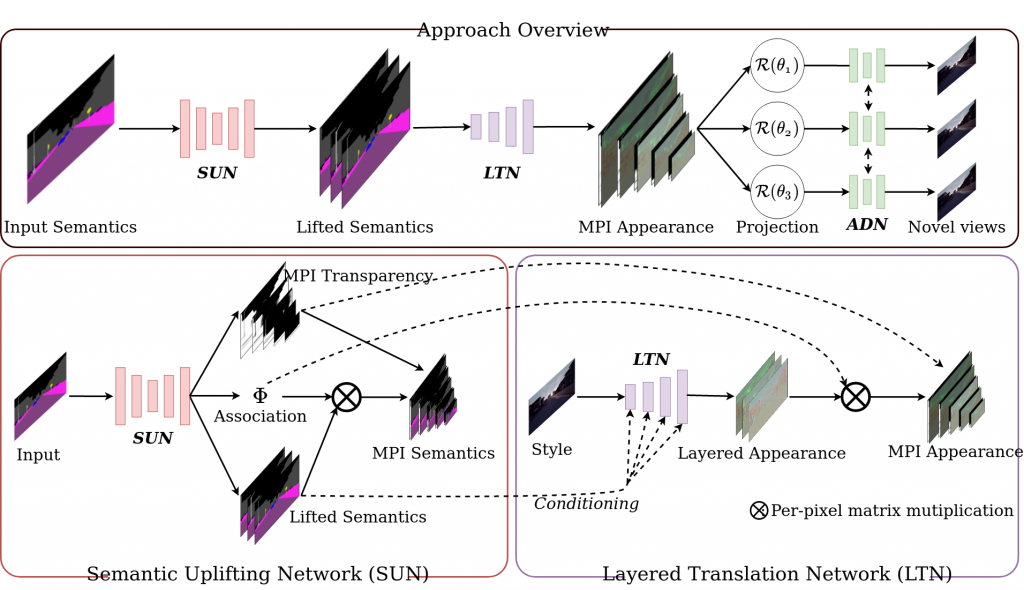

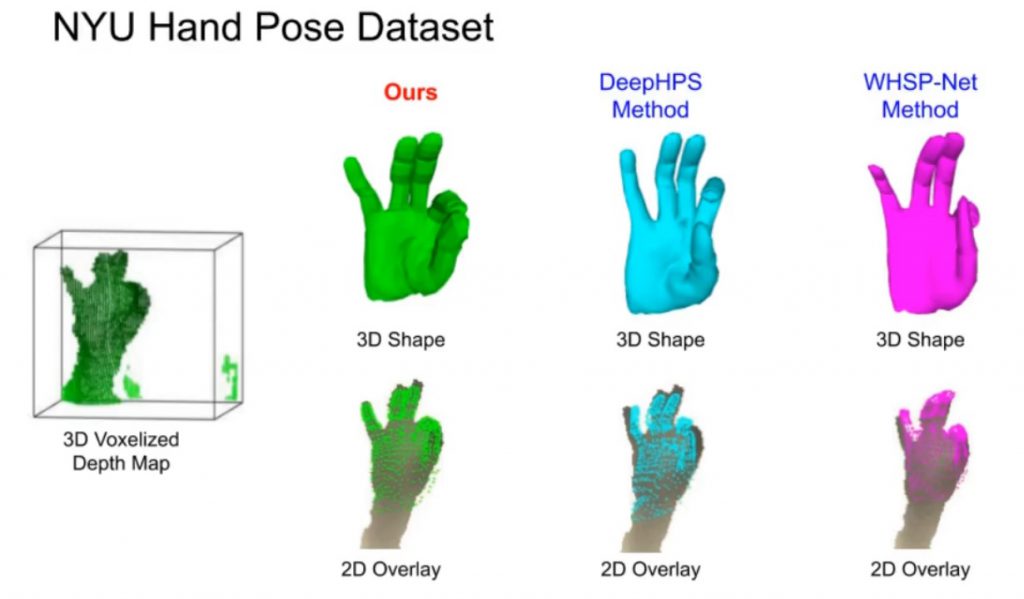

Abstract: Content creation, central to applications such as virtual reality, can be tedious and time-consuming. Recent image synthesis methods simplify this task by offering tools to generate new views from as little as a single input image, or by converting a semantic map into a photorealistic image. We propose to push the envelope further, and introduce Generative View Synthesis (GVS), which can synthesize multiple photorealistic views of a scene given a single semantic map. We show that the sequential application of existing techniques, e.g., semantics-to-image translation followed by monocular view synthesis, fails at capturing the scene’s structure. In contrast, we solve the semantics-to-image translation in concert with the estimation of the 3D layout of the scene, thus producing geometrically consistent novel views that preserve semantic structures. We first lift the input 2D semantic map onto a 3D layered representation of the scene in feature space, thereby preserving the semantic labels of 3D geometric structures. We then project the layered features onto the target views to generate the final novel-view images. We verify the strengths of our method and compare it with several advanced baselines on three different datasets. Our approach also allows for style manipulation and image editing operations, such as the addition or removal of objects, with simple manipulations of the input style images and semantic maps, respectively.

Authors: Tewodros Amberbir Habtegebrial, Varun Jampani, Orazio Gallo, Didier Stricker

Please find our paper here.

Please also check out our video on YouTube.

Please contact Didier Stricker for more information.