ARinfuse is a European project funded under Erasmus+, the EU’s programme to support education, training, youth and sport in Europe. The objective of ARinfuse is to support individuals in acquiring and developing basic skills and key competences within the field of geoinformatics and utility infrastructure, in order to foster employability. This objective is addressed through the development of new learning modules in which Augmented Reality technologies are merged with geoinformatics and applied within the utility infrastructure sector. The developed digital learning content and tools will be implemented in university programs as well as in vocational training programs, and will be made available as Open Educational Resources, open textbooks and Open Source Educational Software.

The Augmented Vision department will contribute to the ARinfuse project by sharing its knowledge and expertise in Augmented Reality technologies for the energy and utilities sector, gained mainly during the European project LARA. Besides DFKI, the following partners are collaborating in the project: GeoImaging Ltd (Cyprus), Novogit AB (Sweden), the Cyprus University of Technology (Cyprus), the GISIG association (Italy), the Sewerage Board of Nicosia (Cyprus), and the Flanders Environment Agency (VMM, Belgium).

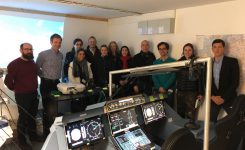

On December 19th, 2018, the project was officially launched during a kick-off meeting in Nicosia, Cyprus, where the partners started to work on educational and training material and on the specification of the software modules.

Contact person: Dr. Alain Pagani

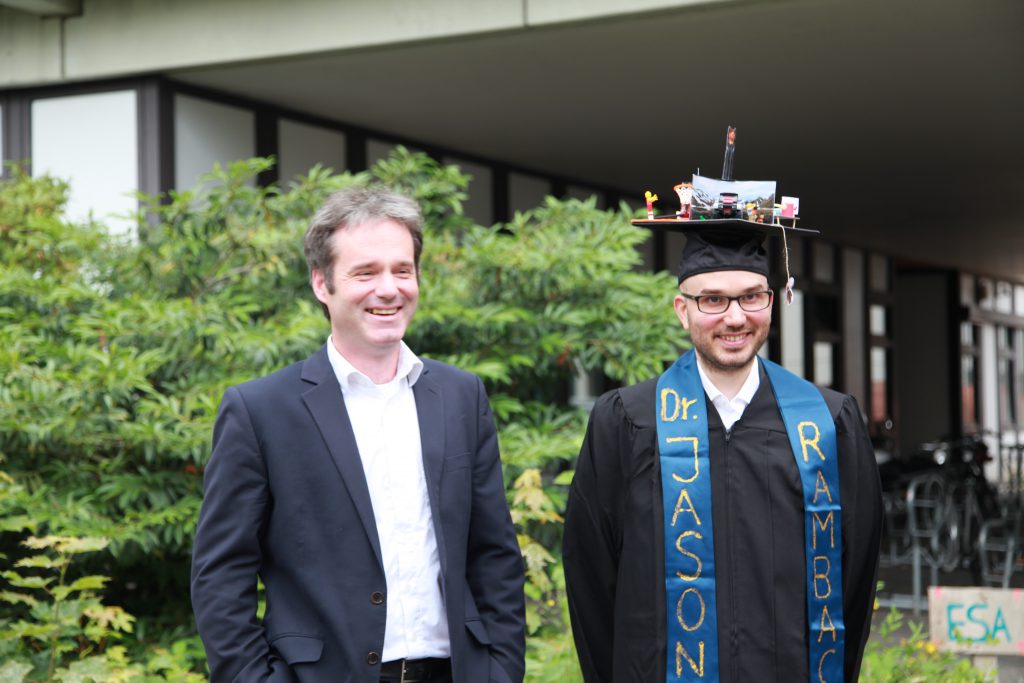

Picture: The ARinfuse partners at the kick-off event. From left to right: Alain Pagani (DFKI), Elena Valari (GeoImaging), Diofantos Hadjimitsis (CUT), Kiki Charalambus (Sewerage Board of Nicosia), Konstantinos Smagas (GeoImaging), Katleen Miserez (VMM), Mario Tzouvaras (CUT), Andreas Christofe (CUT), Anders Ostman (Novogit), Aristodemos Anastasiades (GeoImaging), Giorgio Saio (GISIG).