Recent advances in deep learning have led to interesting new applications, such as analyzing human motion and activities in recorded videos. The analysis ranges from simple motions, such as walking or performing exercises, to complex motions such as playing sports.

An athlete’s performance can be easily captured with a fixed camera in sports such as tennis, badminton, and diving. The wide availability of low-cost cameras in handheld devices has made it commonplace to record videos and analyze an athlete’s performance. Although sports trainers can provide visual feedback by playing back recorded videos, it is still hard to measure and monitor an athlete’s performance improvement. Manual analysis of the footage is also time-consuming, since it involves isolating actions of interest and categorizing them using domain-specific knowledge. Thus, the automatic interpretation of performance parameters in sports has attracted keen interest.

Competitive diving is a well-recognized Olympic aquatic sport in which a person dives from a platform or a springboard and performs different classes of acrobatics before entering the water. These classes are standardized by the international organization Fédération Internationale de Natation (FINA). The differences between the acrobatics performed in the various classes of diving are very subtle. They arise within the interval that starts with the diver standing on the platform or springboard and ends at the moment the diver enters the water. Modeling this interval is challenging, especially because of the rapid changes involved, and requires an understanding of long-term human dynamics. Further, the model must be sensitive to subtle changes in body pose over a large number of frames to determine the correct class.

Automating this kind of task typically involves three challenging sub-problems: 1) temporally cropping events/actions of interest from continuous video; 2) tracking the person of interest even though other divers and bystanders may be in view; and 3) classifying the events/actions of interest.
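To make the first sub-problem concrete, the following is a minimal sketch of temporal cropping, not the method used in this project. It assumes that some upstream model (not shown) has already produced a per-frame score for how likely each frame belongs to an action of interest. The function name `crop_action_segments` and the parameters `threshold` and `min_length` are hypothetical and chosen only for illustration.

```python
from typing import List, Sequence, Tuple


def crop_action_segments(
    scores: Sequence[float],
    threshold: float = 0.5,   # assumed cut-off for "action is happening"
    min_length: int = 3,      # assumed minimum segment length in frames
) -> List[Tuple[int, int]]:
    """Return (start, end) frame ranges, end exclusive, where the score
    stays at or above the threshold for at least min_length frames."""
    segments: List[Tuple[int, int]] = []
    start = None  # index of the first frame of the currently open segment
    for i, score in enumerate(scores):
        if score >= threshold:
            if start is None:
                start = i  # open a new segment
        elif start is not None:
            # Score dropped below the threshold: close the open segment
            # and keep it only if it is long enough.
            if i - start >= min_length:
                segments.append((start, i))
            start = None
    # Close a segment that runs until the last frame of the video.
    if start is not None and len(scores) - start >= min_length:
        segments.append((start, len(scores)))
    return segments


# Hypothetical per-frame scores for a 12-frame clip.
example_scores = [0.1, 0.2, 0.9, 0.8, 0.95, 0.7, 0.1, 0.6, 0.1, 0.9, 0.9, 0.9]
example_segments = crop_action_segments(example_scores)
```

In this toy clip, the single high-scoring frame at index 7 is discarded as too short, while the two longer runs are kept. A real pipeline would pass each cropped range on to the tracking and classification stages.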

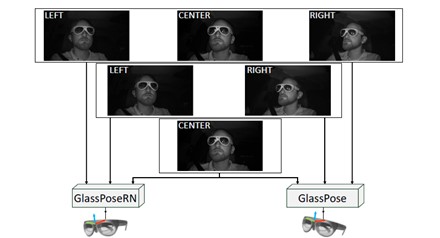

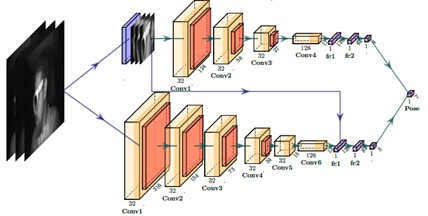

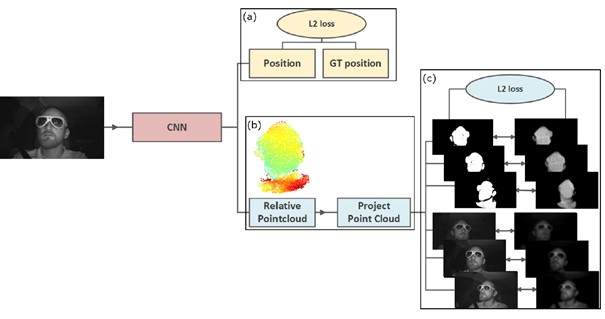

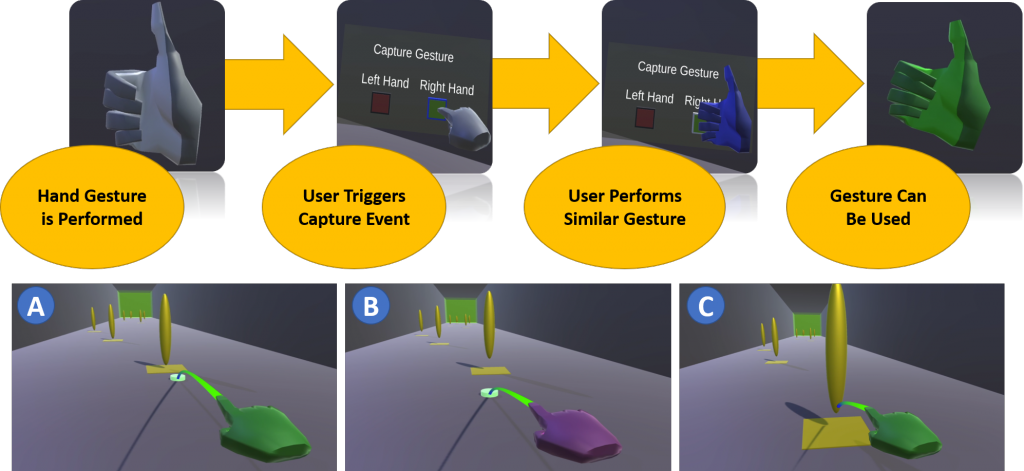

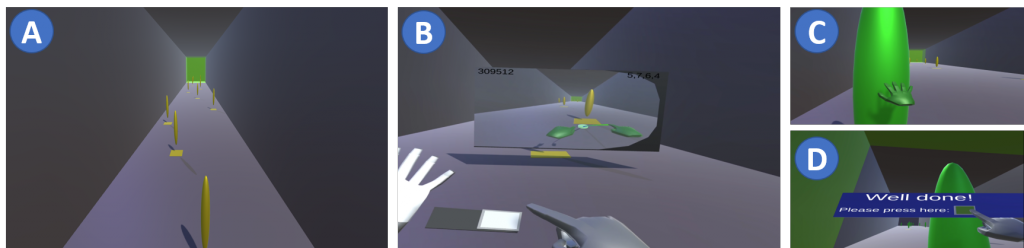

We are developing a solution in cooperation with the Institut für Angewandte Trainingswissenschaft (IAT) in Leipzig to tackle these three sub-problems. We are working towards a complete parameter tracking solution based on monocular, markerless human body motion tracking, using only a mobile device (tablet or mobile phone) as a training support tool for the overall diving action analysis. The proposed techniques can be generalized to video footage recorded in other sports.

Contact persons: Dr. Bertram Taetz, Pramod Murthy