The Augmented Vision department of DFKI participated in the VIZTA project, coordinated by STMicroelectronics. The project aimed to develop innovative technologies in the field of optical sensors and laser sources for short- to long-range 3D imaging, and to demonstrate their value in several key applications, including automotive, security, smart buildings, mobile robotics for smart cities, and Industry 4.0.

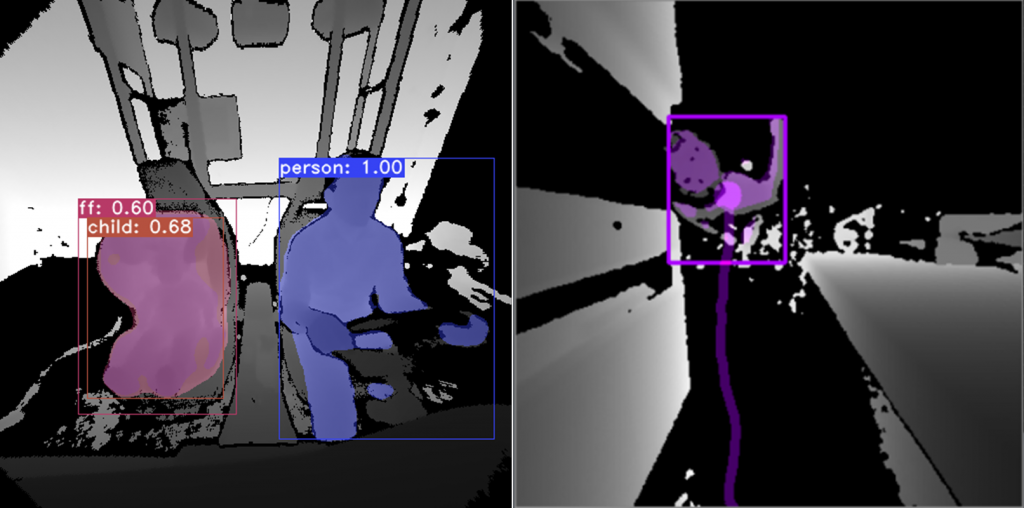

The final project review was successfully completed in Grenoble, France, on November 17-18, 2022. The schedule included presentations on the achievements of all partners as well as live demonstrators of the developed technologies. DFKI presented its smart building person detection demonstrator, which is based on a top-down view from a time-of-flight (ToF) camera and was developed in cooperation with the project partner IEE. A second demonstrator, an in-cabin monitoring system with a wide field of view that is installed in DFKI's lab, was presented in a video.

During VIZTA, DFKI obtained several key results on the topics of in-car and smart building monitoring, including:

- 7 peer-reviewed publications in conferences and journals

- 2 publicly available datasets (over 140 downloads so far), hosted at https://vizta-tof.kl.dfki.de/

- A live demonstrator of person detection: https://www.youtube.com/watch?v=ItCj0K99hlI

VIZTA on LinkedIn: https://www.linkedin.com/company/vizta-ecsel-project/

Contact: Dr. Jason Rambach, Dr. Bruno Mirbach