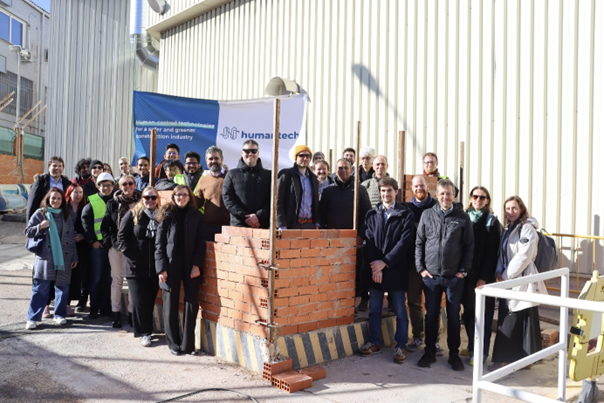

Elgoibar (Spain), 20 November 2025 – The ShieldBot project – Next-Generation Robotics for Sustainable and Efficient Thermal Shielding of Buildings – officially launched with a kick-off meeting on 4–5 November 2025 at the premises of IDEKO Research Centre, the project coordinator, in Elgoibar (Basque Country), Spain.

Funded by the Horizon Europe programme with EUR 4 million and set to run over three years, ShieldBot brings together 11 partners from six European countries. The consortium aims to revolutionise how buildings are constructed, renovated, and maintained through the use of advanced, sustainable robotics.

In response to Europe’s growing demand for energy-efficient and sustainable buildings, ShieldBot aims to bridge the gap between traditional construction methods and modern sustainability standards. The project will develop and validate a new generation of robotic systems specifically designed for the construction, renovation, and maintenance of buildings, enabling greater efficiency, safety, and environmental performance.

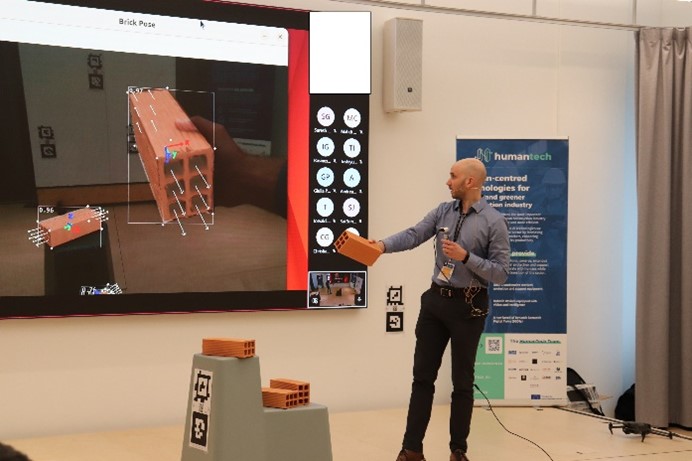

At its core, ShieldBot focuses on three key robotic platforms: Façade-ShieldBot, which installs thermal shielding on building façades to improve insulation and reduce energy consumption; Inner-ShieldBot, designed for internal construction work to enhance the thermal performance of walls and ceilings; and Inspection-ShieldBot, equipped with advanced sensors to assess exteriors, identify structural or insulation needs, and ensure long-term building integrity.

These robotic systems are supported by Digi-Shield, a digital twin platform that aggregates and analyses data from on-site operations, enabling real-time decision-making and precise project planning. In addition, Eco-Shield materials (innovative, eco-friendly insulation solutions) will further minimise the carbon footprint of construction activities.

ShieldBot’s vision extends beyond automation. A strong emphasis is placed on human–robot collaboration, ensuring that technology complements human expertise rather than replacing it. This synergy will enhance workforce safety, productivity, and efficiency, while supporting the transition towards greener and smarter construction processes.

The project’s activities will be structured across three main research areas: disruptive robotic solutions for the construction industry, advanced robot functionalities for construction and renovation works, and advanced construction site technologies. Within these research areas, three demonstration cases will be carried out: interior wall and ceiling construction in office buildings, inspection and maintenance of exterior building façades, and exterior façade insulation of multi-family dwellings.

The ShieldBot consortium, which is in charge of demonstrating the three use cases, unites expertise from leading research institutions, universities, and industrial partners across Europe and is coordinated by IDEKO (Spain). The other partners are: Deutsches Forschungszentrum für Künstliche Intelligenz GmbH – DFKI (Germany), University of Nottingham – Rolls-Royce UTC (UK), Nantes Université (France), CNRS – LS2N Laboratory (France), Robotnik Automation SL (Spain), Bouygues Construction SA (France), Rheinland-Pfälzische Technische Universität – RPTU (Germany), Neo-Eco Development (France), Neo-Eco Ukraine LLC (Ukraine), and MTÜ AUSTRALO Alpha Lab (Estonia).

By combining cutting-edge robotics, artificial intelligence, and sustainable materials, ShieldBot will set a new benchmark for efficiency and environmental responsibility in construction. The project’s outcomes are expected to contribute significantly to the European Green Deal’s objectives and the broader digital and green transformation of the built environment.

Contact: Dr. Jason Rambach