Alt-Text: Teilnehmer des Kick-Off-Treffens des ReVise-UP Forschungsvorhabens stehen vor dem Bergbaugebäude der RWTH Aachen University. // Participants of the kick-off meeting of the ReVise-UP research project stand in front of the mining building of RWTH Aachen University.

Deutsche Version

Forschungsvorhaben „ReVise-UP“ zur Verbesserung der Prozesseffizienz des werkstofflichen Kunststoffrecyclings mittels Sensortechnik gestartet

Im September 2023 startete das vom BMBF geförderte Forschungsvorhaben ReVise-UP („Verbesserung der Prozesseffizienz des werkstofflichen Recyclings von Post-Consumer Kunststoff-Verpackungsabfällen durch intelligentes Stoffstrommanagement – Umsetzungsphase“). In der vierjährigen Umsetzungsphase soll die Transparenz und Effizienz des werkstofflichen Kunststoffrecyclings durch Entwicklung und Demonstration sensorbasierter Stoffstromcharakterisierungsmethoden im großtechnischen Maßstab gesteigert werden.

Auf Basis der durch Sensordaten erzeugten Datentransparenz soll das bisherige Kunststoffrecycling durch drei Effekte verbessert werden: Erstens sollen durch die Datentransparenz positive Anreize für verbesserte Sammel- und Produktqualitäten und damit gesteigerte Rezyklatmengen und -qualitäten geschaffen werden. Zweitens sollen sensorbasiert erfasste Stoffstromcharakteristika dazu genutzt werden, Sortier-, Aufbereitungs- und Kunststoffverarbeitungsprozesse auf schwankende Stoffstromeigenschaften adaptieren zu können. Drittens soll die verbesserte Datenlage eine ganzheitliche ökologische und ökonomische Bewertung der Wertschöpfungskette ermöglichen.

An ReVise-UP beteiligen sich insgesamt 18 Forschungsinstitute, Verbände und Industriepartner. Das Bundesministerium für Bildung und Forschung (BMBF) fördert ReVise-UP im Rahmen der Förderrichtlinie „Ressourceneffiziente Kreislaufwirtschaft – Kunststoffrecyclingtechnologien (KuRT)“ mit 3,92 Mio. €.

Weitere Informationen zu ReVise-UP finden sich unter: https://www.ants.rwth-aachen.de/cms/IAR/Forschung/Aktuelle-Forschungsprojekte/~bdueul/ReVise-UP/

Verbundpartner in ReVise-UP sind:

- Institut für Anthropogene Stoffkreisläufe der RWTH Aachen University (Konsortialleiter)

- Deutsches Forschungszentrum für Künstliche Intelligenz GmbH

- Deutsches Institut für Normung e. V.

- Human Technology Center der RWTH Aachen University

- Hündgen Entsorgungs GmbH & Co. KG

- Krones AG

- Kunststoff Recycling Grünstadt GmbH

- SKZ – KFE gGmbH

- STADLER Anlagenbau GmbH

- Wuppertal Institut für Klima, Umwelt, Energie gGmbH

- PreZero Recycling Deutschland GmbH & Co. KG

Als assoziierte Partner wird ReVise-UP unterstützt von:

- bvse – Bundesverband Sekundärrohstoffe und Entsorgung e. V.

- cirplus GmbH

- HC Plastics GmbH

- Henkel AG

- Initiative „Mülltrennung wirkt“

- Procter & Gamble Service GmbH

- TOMRA Sorting GmbH

Kontakt: Dr. Jason Rambach, Dr. Bruno Mirbach

English version

Research project “ReVise-UP” launched to improve the process efficiency of mechanical plastics recycling using sensor technology

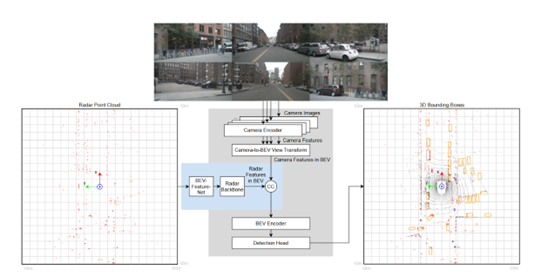

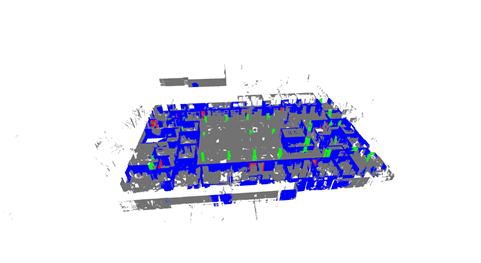

The BMBF-funded research project ReVise-UP (“Improving the process efficiency of mechanical recycling of post-consumer plastic packaging waste through intelligent material flow management – implementation phase”) started in September 2023. During the four-year implementation phase, the transparency and efficiency of mechanical plastics recycling are to be increased by developing and demonstrating sensor-based material flow characterization methods on an industrial scale.

Building on the data transparency created by sensor data, current plastics recycling is to be improved through three effects. First, data transparency is intended to create positive incentives for better collection and product quality, and thus for higher recyclate quantities and qualities. Second, material flow characteristics recorded by sensors are to be used to adapt sorting, treatment and plastics processing operations to fluctuating material flow properties. Third, the improved data basis is intended to enable a holistic ecological and economic assessment of the value chain.

A total of 18 research institutes, associations and industrial partners are participating in ReVise-UP. The German Federal Ministry of Education and Research (BMBF) is funding ReVise-UP with €3.92 million as part of the funding guideline “Resource-efficient circular economy – plastics recycling technologies (KuRT)”.

More information about ReVise-UP can be found at: https://www.ants.rwth-aachen.de/cms/IAR/Forschung/Aktuelle-Forschungsprojekte/~bdueul/ReVise-UP/?lidx=1

Project partners in ReVise-UP are:

- Department of Anthropogenic Material Cycles of RWTH Aachen University (consortium lead)

- Deutsches Forschungszentrum für Künstliche Intelligenz GmbH

- Deutsches Institut für Normung e. V.

- Human Technology Center of RWTH Aachen University

- Hündgen Entsorgungs GmbH & Co. KG

- Krones AG

- Kunststoff Recycling Grünstadt GmbH

- SKZ – KFE gGmbH

- STADLER Anlagenbau GmbH

- Wuppertal Institut für Klima, Umwelt, Energie gGmbH

- PreZero Recycling Deutschland GmbH & Co. KG

Associated partners in ReVise-UP are:

- bvse – Bundesverband Sekundärrohstoffe und Entsorgung e. V.

- cirplus GmbH

- HC Plastics GmbH

- Henkel AG

- Initiative „Mülltrennung wirkt“

- Procter & Gamble Service GmbH

- TOMRA Sorting GmbH

Contact: Dr. Jason Rambach, Dr. Bruno Mirbach