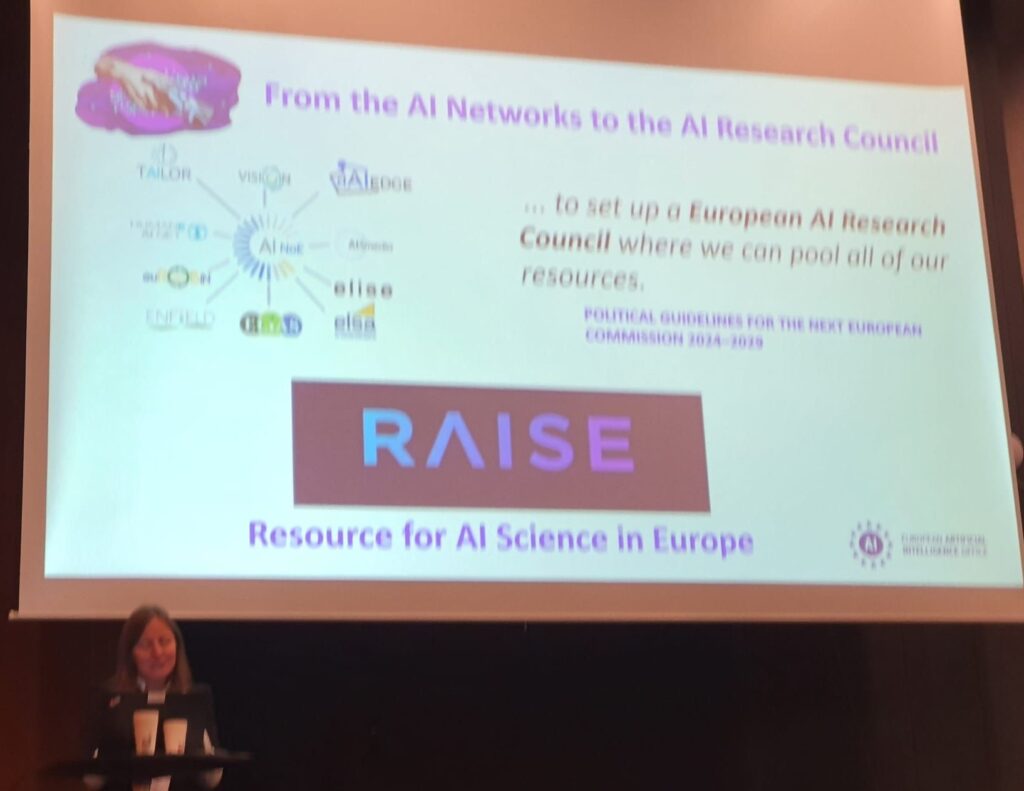

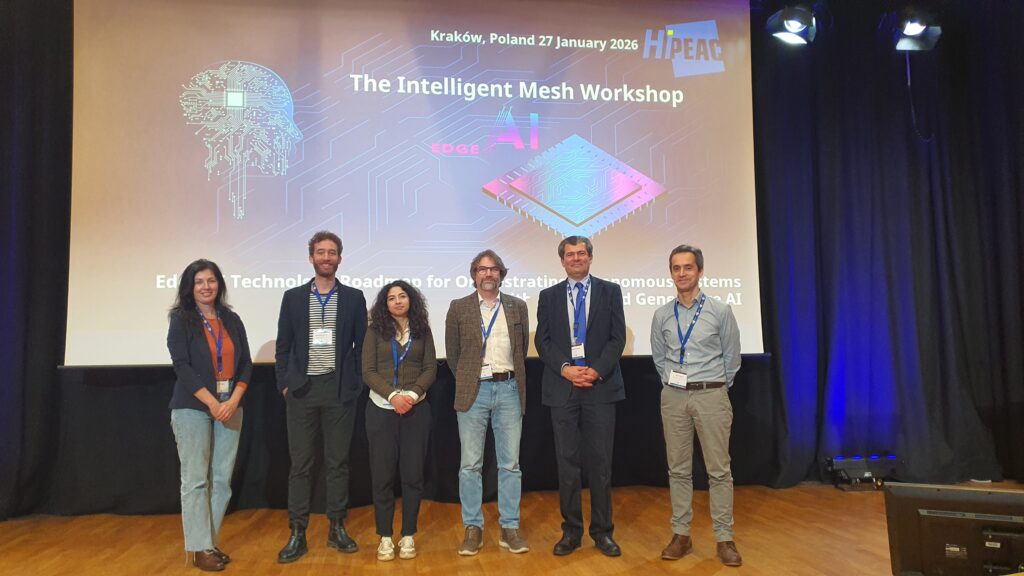

The Network of Excellence dAIEDGE organized two strategic workshops at the High Performance Embedded Architectures and Compilers Conference (HiPEAC 2026), which took place in Krakow, Poland, from January 26 to 28. The events brought together leading researchers, industry representatives and European initiatives to discuss the future of distributed, autonomous and trustworthy edge AI systems.

The workshop “The Intelligent Mesh: Edge AI Technology Roadmap for Orchestrating Autonomous Systems with Agentic and Generative AI”, co-organized by Ovidiu Vermesan from SINTEF, Alain Pagani from DFKI, Marcello Coppola from STMicroelectronics and Fabian Chersi from CEA, focused on the next generation of edge AI architectures. Discussions addressed heterogeneous hardware platforms, edge accelerators, neuromorphic approaches and optimized AI frameworks, as well as Small Language Models and Vision Language Models tailored for embedded systems. A central theme was agentic AI at the edge and the vision of an intelligent mesh of autonomous systems capable of collaboration and orchestration. The workshop contributed to shaping a European roadmap for secure, sovereign and scalable edge AI.

The second workshop, “Sustainable and Trustworthy Edge AI for Robotics”, was co-organized by the Networks of Excellence dAIEDGE, euROBIN, ELIAS and ENFIELD. It featured keynote talks by Maximilian Durner from DLR, Jean Marc Bonnefous from TCS, Georgios Spathoulas from NTNU and Eyup Kun from KU Leuven, and included two technical sessions on efficient, regulation-aware and human-centered AI for robotic systems. The workshop concluded with a panel discussion moderated by Alain Pagani on aligning European lighthouse strategies for sustainable, trustworthy and efficient AI.

Together, the two workshops highlighted dAIEDGE’s leading role in fostering collaboration across European AI networks and in advancing a coordinated strategy for next-generation edge intelligence.

Coordinator: Dr. Alain Pagani

Project Manager: Dr. Mohamed Selim