The Augmented Vision department had a strong presence at the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2025), with contributions to the main conference, workshops, and challenges:

Main Conference Paper

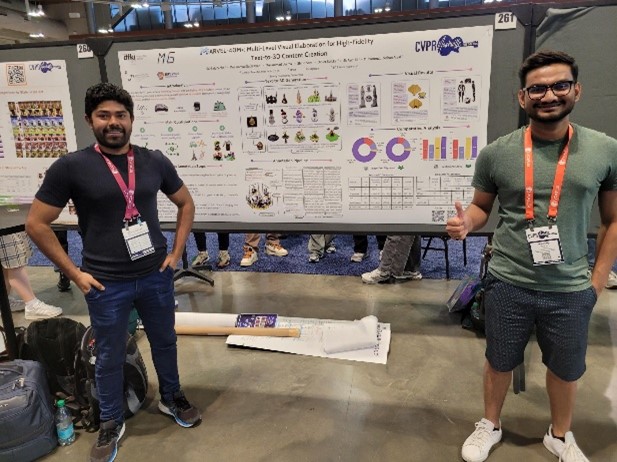

MARVEL-40M+: Multi-Level Visual Elaboration for High-Fidelity Text-to-3D Content Creation

By: Sankalp Sinha, Mohammad Sadil Khan, Muhammad Usama, Shino Sam, Didier Stricker, Dr. Sk Aziz Ali, Muhammad Zeshan Afzal

Project: https://sankalpsinha-cmos.github.io/MARVEL/

Workshop Papers

ToF-360: A Panoramic Time-of-Flight RGB-D Dataset for Single-Capture Indoor Semantic 3D Reconstruction

By: Hideaki Kanayama, Mahdi Chamseddine, Suresh Guttikonda, So Okumura, Soichiro Yokota, Didier Stricker, Jason Rambach

Dataset: https://huggingface.co/datasets/COLE-Ricoh/ToF-360

CACP: Context-Aware Copy-Paste to Enrich Image Content for Data Augmentation

By: Qiushi Guo, Shaoxiang Wang, Chun-Peng Chang, Jason Rambach

PDF: https://www.dfki.de/fileadmin/user_upload/import/15782_cacp_cvpr_workshop.pdf

Towards Unconstrained 2D Pose Estimation of the Human Spine

By: Muhammad Saif Ullah Khan, Stephan Krauß, Didier Stricker

PDF: https://www.dfki.de/fileadmin/user_upload/import/15804_2025116268.pdf

Challenges & Awards

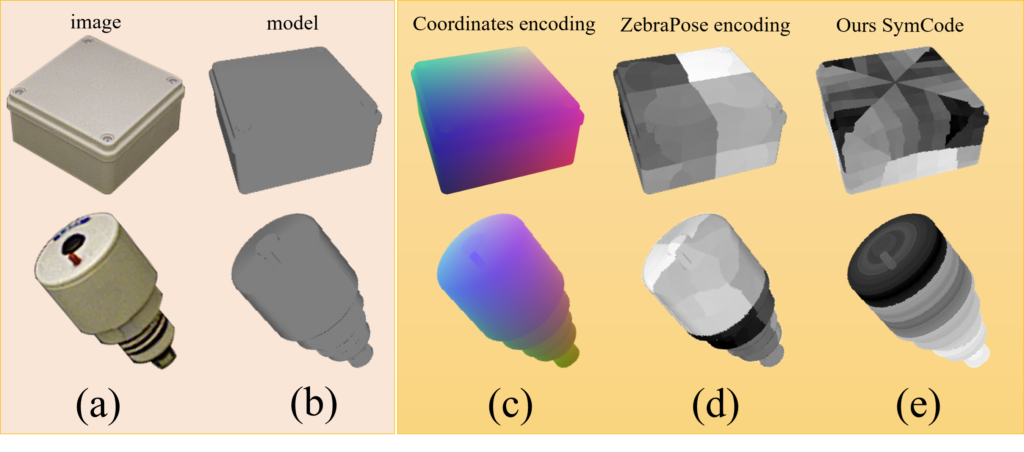

OpenCV Perception Challenge – Bin-Picking Track (7th place)

By: Sai Srinivas Jeevanandam, Jason Rambach

Info: https://bpc.opencv.org/

Outstanding CVPR Reviewer Award

Jason Rambach