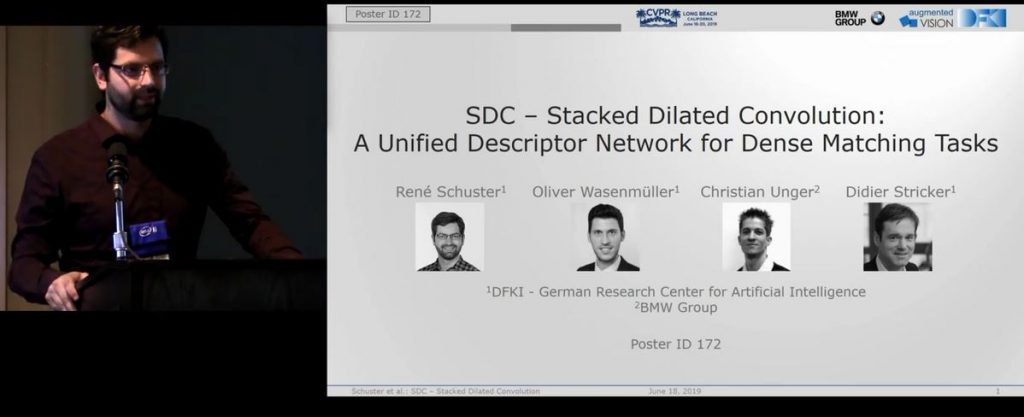

On Wednesday, November 11th, 2020, Jameel Malik successfully defended his PhD thesis entitled “Deep Learning-based 3D Hand Pose and Shape Estimation from a Single Depth Image: Methods, Datasets and Application” before the PhD committee consisting of Prof. Dr. Didier Stricker (Technische Universität Kaiserslautern), Prof. Dr. Karsten Berns (Technische Universität Kaiserslautern), Prof. Dr. Antonis Argyros (University of Crete) and Prof. Dr. Sebastian Michel (Technische Universität Kaiserslautern).

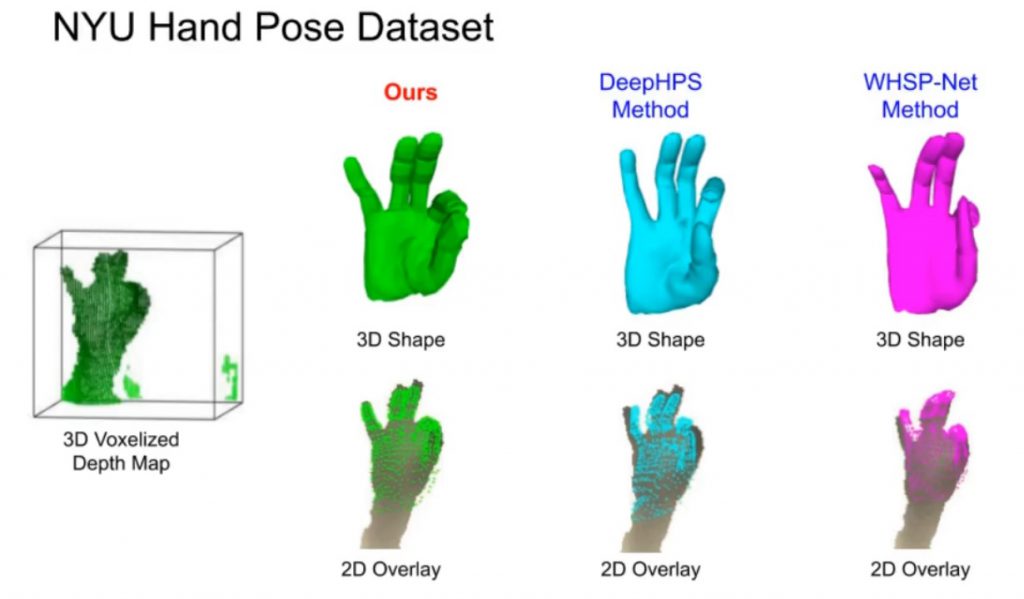

In his thesis, Jameel Malik addressed the unique challenges of 3D hand pose and shape estimation and proposed several deep learning-based methods that achieve state-of-the-art accuracy on public benchmarks. His work focuses on developing an effective interlink between hand pose and shape using deep neural networks. This interlink improves the accuracy of both estimates. His recent paper on a 3D convolution-based hand pose and shape estimation network was accepted at the premier conference IEEE/CVF CVPR 2020.

Jameel Malik received his bachelor's and master's degrees in electrical engineering from the University of Engineering and Technology (UET) and the National University of Sciences and Technology (NUST), Pakistan, respectively. Since 2017, he has been working as a researcher in the Augmented Vision (AV) group at DFKI. His research interests include computer vision and deep learning.

A week later, on Thursday, November 19th, 2020, Mr. Markus Miezal also successfully defended his PhD thesis entitled “Models, methods and error source investigation for real-time Kalman filter based inertial human body tracking” in front of the PhD committee consisting of Prof. Dr. Didier Stricker (TU Kaiserslautern and DFKI), Prof. Dr. Björn Eskofier (FAU Erlangen) and Prof. Dr. Karsten Berns (TU Kaiserslautern).

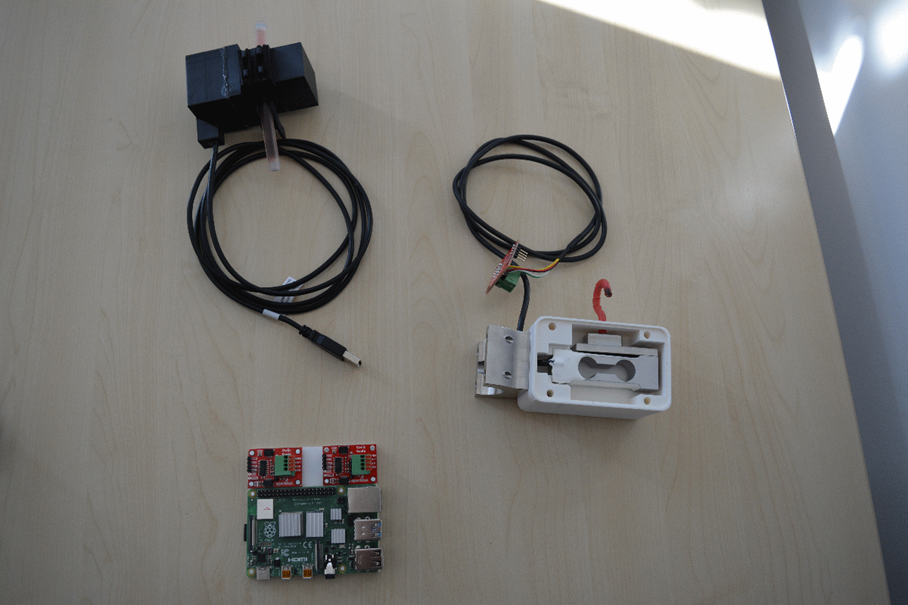

The goal of the thesis is to work towards a robust human body tracking system based on inertial sensors. In particular, it investigates the identification of different error sources and their impact on tracking quality. Finally, the thesis proposes a real-time, magnetometer-free approach for tracking the lower body with ground contact and translation information. The first-author publications resulting from this work include a journal article in MDPI Sensors and a conference paper at ICRA 2017.

In 2010, Markus Miezal received his diploma in computer science from the University of Bremen, Germany, and started working in the Augmented Vision group at DFKI on visual-inertial sensor fusion and body tracking. In 2015, he followed Dr. Gabriele Bleser into the newly founded interdisciplinary research group wearHEALTH at the TU Kaiserslautern, where the research on body tracking continued, focussing on health-related applications such as gait analysis. While finishing his PhD thesis, he co-founded the company sci-track GmbH as a spin-off from TU KL and DFKI GmbH, which aims to transfer robust inertial human body tracking algorithms as middleware to industry partners. In the future, Markus will continue his research at the university and support the company.