Target groups

The ability to digitize real objects is of major interest in many application domains, for example for setting up a virtual museum, for building virtual object repositories for digital worlds (e.g., games, Second Life), or for converting handmade models into a digitally usable representation.

OrcaM supports these and many other application domains by providing high-quality models of real-world objects with an in-plane detail resolution in the submillimeter range. The resulting models are tuned for web applications, i.e., they have a very low polygon count (around 20k triangles for a “standard” object). Detail information is encoded in corresponding normal and displacement maps.
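
To illustrate how a displacement map can restore detail on a reduced mesh, here is a minimal sketch that offsets a surface point along its normal by a sampled height. The function name `displace`, the parameter `scale`, and the convention that heights lie in [0, 1] with 0.5 as the reference surface are assumptions for illustration; the document does not specify how OrcaM encodes its displacement maps.

```python
import math


def displace(position, normal, height, scale=1.0, mid=0.5):
    """Offset a surface point along its (normalized) normal by a map sample.

    Hypothetical convention: `height` is in [0, 1], `mid` means "no offset",
    and `scale` converts the normalized value into model units (e.g. mm).
    """
    length = math.sqrt(sum(c * c for c in normal))
    if length == 0.0:
        raise ValueError("normal must be non-zero")
    unit = [c / length for c in normal]
    offset = (height - mid) * scale
    return tuple(p + offset * n for p, n in zip(position, unit))


# A point on a flat patch facing +z, pushed outward by a bright map sample.
print(displace((10.0, 5.0, 0.0), (0.0, 0.0, 1.0), 0.75, scale=2.0))  # → (10.0, 5.0, 0.5)
```

In a renderer or a geometry-recreation step, this offset would be applied per vertex of a finely tessellated version of the low-polygon mesh.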

Method

The objects are placed on a glass plate and digitized. Digitization is based on the structured-light principle: dedicated light patterns are projected onto the object while it is photographed from different angles by multiple cameras. From these images the shape (geometry) of the object is acquired without contact. Currently the acquisition images are 16-megapixel RGB images of consumer-grade quality. In the near future the cameras will be replaced by monochrome cameras to further increase acquisition speed and resolution and to avoid demosaicing (de-Bayering) artifacts.
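
The document does not state which light patterns OrcaM projects beyond mentioning fringes. As an illustration of one common structured-light technique, N-step phase shifting, the sketch below recovers the wrapped fringe phase at a single pixel from N images, assuming sample k follows the sinusoidal model A + B·cos(φ + 2πk/N). The function name and the model are assumptions, not OrcaM's documented pipeline.

```python
import math


def wrapped_phase(samples):
    """Return the wrapped phase in [-pi, pi] from N >= 3 phase-shifted samples.

    Assumes sample k was captured under the pattern A + B*cos(phi + 2*pi*k/N).
    """
    n = len(samples)
    if n < 3:
        raise ValueError("need at least three phase-shifted images")
    s = sum(i * math.sin(2 * math.pi * k / n) for k, i in enumerate(samples))
    c = sum(i * math.cos(2 * math.pi * k / n) for k, i in enumerate(samples))
    # The constant A cancels; sum(I*cos) = (N/2)*B*cos(phi) and
    # sum(I*sin) = -(N/2)*B*sin(phi), so atan2 recovers phi.
    return math.atan2(-s, c)


# Simulate four images of one pixel whose true phase is 1.0 rad.
true_phi, a, b = 1.0, 0.5, 0.4
images = [a + b * math.cos(true_phi + 2 * math.pi * k / 4) for k in range(4)]
print(round(wrapped_phase(images), 6))  # → 1.0
```

In a complete system the wrapped phase would still have to be unwrapped (for example with Gray codes or several fringe frequencies) and triangulated using the calibrated camera and projector geometry.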

Additional images, taken without the projector’s light pattern but under diffuse and directional lighting, are used to reconstruct the color as well as the illumination behavior (appearance) of the object. OrcaM can reconstruct objects with a diameter of up to 80 cm and a weight of up to 100 kg.
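
The document does not detail how appearance is modeled. As a deliberately simplified sketch, assuming a Lambertian surface, a known unit normal, and calibrated directional lights of known strength, a per-point albedo can be estimated from the directionally lit images by least squares: minimizing the sum of (I_j − ρ·E_j·cos θ_j)² over lights j gives ρ = Σ I_j s_j / Σ s_j² with s_j = E_j·cos θ_j. The function name and the Lambertian model are assumptions; real materials also need specular terms.

```python
def lambert_albedo(normal, observations):
    """Least-squares Lambertian albedo at one surface point.

    observations: iterable of (light_dir, intensity, light_strength).
    Assumptions: unit normal and unit light directions in the same frame,
    no cast shadows or specular highlights in the observations used.
    """
    num = 0.0
    den = 0.0
    for light_dir, intensity, light_strength in observations:
        cos_theta = sum(n * l for n, l in zip(normal, light_dir))
        if cos_theta <= 0.0:
            continue  # the point faces away from this light: no information
        shading = light_strength * cos_theta
        num += intensity * shading
        den += shading * shading
    if den == 0.0:
        raise ValueError("no light illuminates this point")
    return num / den


obs = [
    ((0.0, 0.0, 1.0), 0.6, 1.0),   # light straight above the surface
    ((0.6, 0.0, 0.8), 0.48, 1.0),  # oblique light, cos(theta) = 0.8
    ((0.0, 0.0, -1.0), 0.3, 1.0),  # light behind the surface: ignored
]
print(lambert_albedo((0.0, 0.0, 1.0), obs))  # approximately 0.6
```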

Special Features

OrcaM is designed to reconstruct whole objects in a single pass, which requires acquiring the objects from below as well. To achieve this, the object is placed on a rotatable, height-adjustable glass carrier. Both the projected fringes and the LEDs illuminate the object through the glass plate, and the images are also taken through the carrier. The inevitable reflections on the glass and the distortions introduced by photographing through the plate are compensated automatically. Spurious points caused by interreflections and/or sensor noise are identified and removed automatically. More information on this topic will soon be available in “Robust Outlier Removal from Point Clouds Acquired with Structured Light”, J. Köhler et al. (accepted at Eurographics).
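
The document does not describe how OrcaM compensates for the glass carrier. One ingredient of any such correction is a basic optics fact: a ray crossing a plane-parallel plate leaves it parallel to its original direction but laterally shifted. The sketch below computes that shift from Snell's law, d = t·sin(θ − θ_r)/cos θ_r with sin θ = n·sin θ_r; the plate thickness and refractive index in the example are placeholder values, not OrcaM's.

```python
import math


def lateral_shift(thickness_mm, incidence_deg, n_glass=1.5, n_air=1.0):
    """Lateral offset (mm) of a ray crossing a plane-parallel glass plate.

    Snell's law gives the refraction angle r from n_air*sin(t) = n_glass*sin(r);
    the emerging ray is parallel to the incident one, shifted by
    thickness * sin(t - r) / cos(r).
    """
    theta = math.radians(incidence_deg)
    refracted = math.asin(n_air * math.sin(theta) / n_glass)
    return thickness_mm * math.sin(theta - refracted) / math.cos(refracted)


# Placeholder plate: 10 mm thick, refractive index 1.5.
for angle in (0, 20, 40, 60):
    print(angle, round(lateral_shift(10.0, angle), 3))
```

Because the shift grows with the viewing angle, it cannot be treated as a constant offset; both camera rays and projector rays that cross the plate would need such a correction.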

Another important feature of OrcaM is the reconstruction of very dark, light-absorbing materials, for which outstanding results can be achieved. See, for example, the image “Sports shoe”, which shows several views of the reconstruction of a worn shoe. The shoe was acquired in a single pass from all directions, with the sole placed directly on the glass plate. Even at the material boundaries the reconstruction is nearly perfect. In the middle of the sole (near the bottom of the image) a transparent gel cushion could not be reconstructed, but the material underneath the cushion is reconstructed with very little noise.

-

-

OrcaM Sphere

-

-

Reconstruction examples

-

-

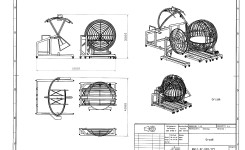

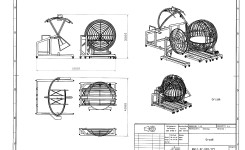

Technical drawing

-

-

Sports shoe

-

-

Geometry example

-

-

Photograph and 3D object resolution

-

-

Paper towel; (left) texture only, (right) normals

Aim

The aim of OrcaM is the automated conversion of real-world objects into high-quality digital representations, enabling their use on the Internet, in movies, images, computer games, and many other digital applications. In particular, it enables their recreation using modern 3D printers, milling machines, and the like. Hence, especially in the domain of cultural heritage (but also in very different domains), it becomes possible to provide an interested audience with 3D objects either as an interactive visualization or as a physical object. Note that OrcaM is currently concerned only with digitization, not with recreation.

Naturally, questions about resolution arise. The common question “what resolution does OrcaM provide?” is somewhat more involved to answer. Assume the current setting with 16M pixels per image. OrcaM supports objects with a diameter of up to 80 cm, and the cameras are set up so that the object fills almost the complete image. Spreading an area of roughly 80 cm × 80 cm (640,000 mm²) over 16M pixels means that a single pixel covers approximately 0.04 mm², or, in other words, 25 pixels are acquired per square millimeter. For smaller objects the cameras are adjusted so that the object again covers the complete image. Hence this is the lowest resolution currently provided, whereas objects with half the diameter can be acquired with approximately four times the resolution, because the imaged area shrinks by a factor of four.
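
The resolution estimate above can be reproduced with a few lines of arithmetic. This sketch assumes, as a simplification, that the object fills a square image whose side corresponds to the object diameter; the function names are illustrative only.

```python
def pixel_density(diameter_mm, pixels=16_000_000):
    """Pixels per mm^2, assuming a square field of view of side `diameter_mm`."""
    return pixels / (diameter_mm * diameter_mm)


def pixel_footprint(diameter_mm, pixels=16_000_000):
    """Area in mm^2 covered by one pixel under the same assumption."""
    return 1.0 / pixel_density(diameter_mm, pixels)


print(pixel_footprint(800))  # → 0.04 (mm^2 per pixel)
print(pixel_density(800))    # → 25.0 (pixels per mm^2)
print(pixel_density(400))    # → 100.0 (half the diameter, four times the density)
```

The quadratic dependence on the diameter is why halving the object size quadruples the sampling density.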

Furthermore, at every pose of the camera head and the glass plate (the number of distinct head and carrier poses depends mainly on the object’s geometry), not one but seven cameras acquire data, adding up to approximately 175 pixels per square millimeter (7 × 25). Since each point on the object is acquired from multiple poses, the number of samples per surface point rises further; however, part of this information is largely redundant and is therefore mainly used to reduce noise.
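
A small sketch of the two effects described above: the sample count adds up across cameras, and redundant observations of the same point mainly reduce noise. The σ/√k noise model assumes independent, zero-mean measurement noise, which is an idealization; real redundant observations are partly correlated, so the actual gain is smaller. Function names are illustrative.

```python
import math


def combined_density(per_camera_density, cameras=7):
    """Total pixel samples per mm^2 when several cameras image the same area."""
    return per_camera_density * cameras


def averaged_noise(sigma, observations):
    """Standard deviation of the mean of `observations` independent samples."""
    return sigma / math.sqrt(observations)


print(combined_density(25.0))   # → 175.0 samples per mm^2
print(averaged_noise(0.1, 4))   # → 0.05: four independent samples halve the noise
```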

For most state-of-the-art systems the above holds only for the image plane, whereas the so-called depth resolution depends heavily on computational accuracy. Worse, the depth resolution is position- and view-dependent. To become independent of the actual setting and to provide good depth resolution, special satellite cameras were introduced that constrain the depth uncertainty to be comparable to the xy-uncertainty. Although not strictly valid in theory, in practice the depth resolution can be assumed to be as high as the xy-resolution.
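
Why depth resolution is position- and view-dependent can be seen from the textbook approximation for a rectified stereo pair (a generic model, not OrcaM's actual camera geometry, which the document does not describe): from z = f·b/d, a disparity error δd produces a depth error δz ≈ z²·δd/(f·b). Depth error grows with the square of the distance and shrinks as the baseline b widens, which is one reason additional cameras at wider angles can constrain depth. All numbers in the example are placeholders.

```python
def depth_uncertainty(depth_mm, baseline_mm, focal_px, disparity_err_px=0.25):
    """Approximate depth error (mm) for a rectified stereo pair.

    Derived from z = f*b/d, which gives dz ~= z**2 / (f*b) * dd.
    """
    return depth_mm ** 2 / (focal_px * baseline_mm) * disparity_err_px


# Placeholder setup: object 1 m away, focal length 5000 px.
for baseline in (100.0, 500.0):
    print(baseline, depth_uncertainty(1000.0, baseline, 5000.0))
```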

Another important point with respect to resolution is that the system has two more or less independent resolution limits. The first applies to so-called “in-plane” geometry, i.e., structures whose main extent is parallel to the object’s local surface and whose extent normal to the local surface is very small. This resolution is extremely high (submillimeter), as can be seen in the video of Lehmbruck’s “Female Torso”, where the tiny mold grooves are reliably reconstructed. Another example is the image “Paper towel”, where all individual imprints were successfully acquired. Note that the imprints do not appear in the texture image, which is pure white, but only in the normal map.

The second type is the so-called “out-of-plane” resolution, which applies to features whose main extent is normal to the object’s local surface. Here it is much harder to correctly validate surface-point candidates on very small structures rather than classifying them as noise. Although the basic acquisition resolution is the same as in the first case, such structures are rejected during reconstruction when they are narrower than roughly 2 mm. Current research aims at compensating for this effect.

Formats

The data format of the digital models is as important as their resolution, and several formats can be provided. Currently a triangle mesh, a texture map, and a normal map are computed. The object itself is represented as a triangle mesh, which can be provided in standard formats such as OBJ, PLY, or STL and easily converted into virtually any other format. In addition to the pure vertex positions, per-vertex colors, normals, and binormals can be provided; the binormal, however, cannot be stored in every format. In general the meshes are reduced to about 20k triangles to enable their use in web applications. This reduction is optional, however: the meshes can also be generated at their original resolution of around 10 million triangles.
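
As an illustration of the per-vertex attributes mentioned above, here is a minimal ASCII PLY writer for a triangle mesh with vertex normals and colors. The property names `nx`/`ny`/`nz` and `red`/`green`/`blue` follow widely used PLY conventions; this is a sketch, not OrcaM's exporter, and binormals are left out because PLY has no commonly agreed property name for them.

```python
def to_ascii_ply(vertices, faces):
    """Serialize a triangle mesh with per-vertex normals and colors as ASCII PLY.

    vertices: list of ((x, y, z), (nx, ny, nz), (r, g, b)) with r, g, b in 0..255
    faces: list of (i, j, k) zero-based vertex indices
    """
    lines = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(vertices)}",
        "property float x",
        "property float y",
        "property float z",
        "property float nx",
        "property float ny",
        "property float nz",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        f"element face {len(faces)}",
        "property list uchar int vertex_indices",
        "end_header",
    ]
    for (x, y, z), (nx, ny, nz), (r, g, b) in vertices:
        lines.append(f"{x} {y} {z} {nx} {ny} {nz} {r} {g} {b}")
    for i, j, k in faces:
        lines.append(f"3 {i} {j} {k}")  # each face lists its 3 vertex indices
    return "\n".join(lines) + "\n"


triangle = [
    ((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (255, 0, 0)),
    ((1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0, 255, 0)),
    ((0.0, 1.0, 0.0), (0.0, 0.0, 1.0), (0, 0, 255)),
]
print(to_ascii_ply(triangle, [(0, 1, 2)]))
```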

Currently, maps with 16M pixels (4096 × 4096, a power-of-two size) are generated, but higher resolutions can be generated on demand. The texture packing is up to 100% (on average 10%) denser than with previous state-of-the-art texture packing algorithms; see Nöll, “Efficient Packing …”, for details. Fine details are encoded in these maps in order to preserve that information for visualization or for recreating the geometry via displacement mapping. The textures are generated as uncompressed PNG RGB images and can be converted into most other formats with little effort. Normals are computed in tangent space as well as in object space.
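
The relation between tangent-space and object-space normals can be sketched as follows. This assumes the common 8-bit encoding that maps [0, 255] to [−1, 1] and an orthonormal tangent/bitangent/normal (TBN) basis per surface point; the document does not state OrcaM's encoding or handedness convention (conventions differ, e.g. in the sign of the bitangent), and the function names are illustrative.

```python
import math


def decode_normal(rgb):
    """Map an 8-bit RGB normal-map texel to a unit vector with components in [-1, 1]."""
    v = [c / 255.0 * 2.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]


def tangent_to_object(n_tangent, tangent, bitangent, normal):
    """Rotate a tangent-space normal into object space using the TBN basis.

    Assumes tangent, bitangent and normal are orthonormal object-space vectors.
    """
    out = [
        n_tangent[0] * t + n_tangent[1] * b + n_tangent[2] * n
        for t, b, n in zip(tangent, bitangent, normal)
    ]
    length = math.sqrt(sum(c * c for c in out))
    return [c / length for c in out]


# A "flat" texel decodes to roughly (0, 0, 1), i.e. the unperturbed surface normal.
flat = decode_normal((128, 128, 255))
# On a face whose normal is +x (tangent +y, bitangent +z) it maps to roughly +x.
print(tangent_to_object(flat, (0.0, 1.0, 0.0), (0.0, 0.0, 1.0), (1.0, 0.0, 0.0)))
```

Storing tangent-space normals keeps the map independent of the mesh orientation, while object-space normals avoid the need for per-vertex tangents at render time.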

Limitations

As with many other acquisition devices, it is currently not possible to acquire transparent materials. Highly reflective materials also pose significant challenges. Partial solutions can remedy the situation, and research is aiming at a general solution; first results on car finish and brushed or polished metal are very promising. Another important limitation is that the system needs to photograph each point from multiple viewpoints, which is difficult for strongly concave parts of an object because of occlusion. We are working on a reconfigurable camera head that will be able to optimize camera positions with respect to the object geometry.

Results

Preliminary results of OrcaM reconstructions can be found here.