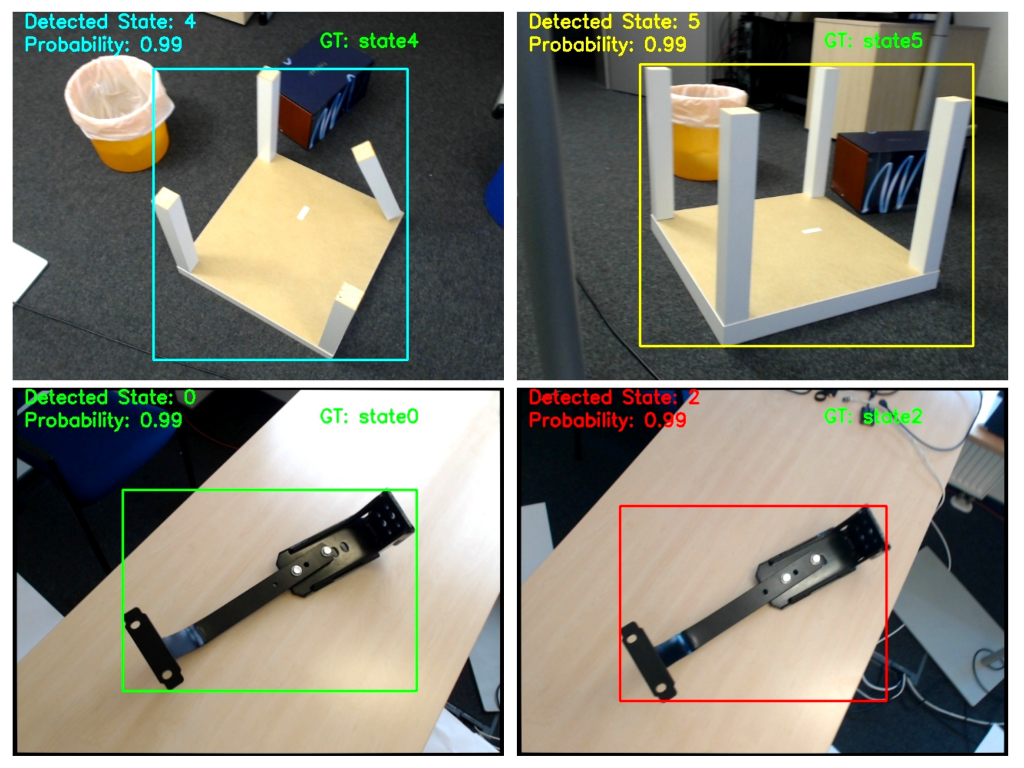

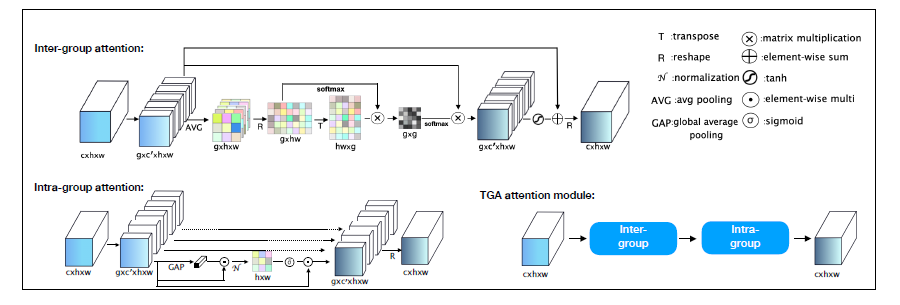

The ENNOS project combines color and depth cameras with the capabilities of deep neural networks on a compact FPGA-based platform, creating a flexible and powerful optical system with a wide range of applications in production environments. While FPGAs offer the flexibility to adapt the system to different tasks, they also constrain the size and complexity of the neural networks that can run on them. The challenge is to map the large and complex structure of modern neural networks onto a small, compact FPGA architecture. To showcase the capabilities of the ENNOS concept, three scenarios have been selected: the first covers the automatic anonymization of people during remote diagnosis, the second addresses semantic 3D scene segmentation for robotic applications, and the third features an assistance system for model identification and stocktaking in large facilities.

At the milestone review, a prototype of the ENNOS camera was presented. It integrates color and depth cameras as well as an FPGA that executes neural networks directly on the device. In addition, solutions for all three scenarios were successfully demonstrated, with one of them already running entirely on the ENNOS platform. This shows that the project is on track to achieve its goals and validates its fundamental approach and concept.

Project Partners:

Robert Bosch GmbH

Deutsches Forschungszentrum für Künstliche Intelligenz GmbH (DFKI)

KSB SE & Co. KGaA

ioxp GmbH

ifm electronic GmbH*

PMD Technologies AG*

*Associated Partner

Contact: Stephan Krauß
