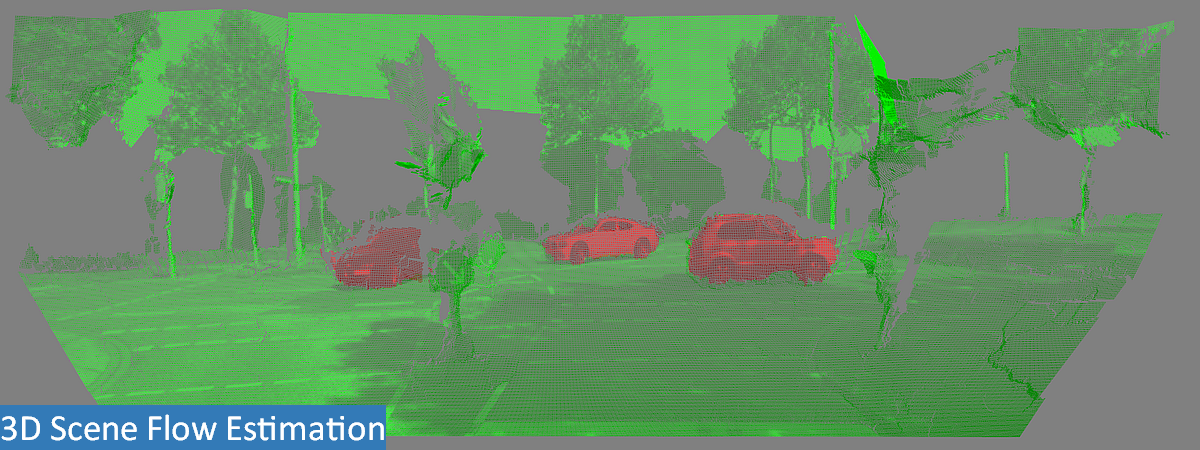

The Automotive Scene Understanding (ASU) team advances visual perception for assisted and automated driving. The team works across a range of perception tasks and specializes in multi-modal and heterogeneous sensor fusion, which makes the overall system more robust. Their research focuses on compact networks that use domain knowledge to process data efficiently.

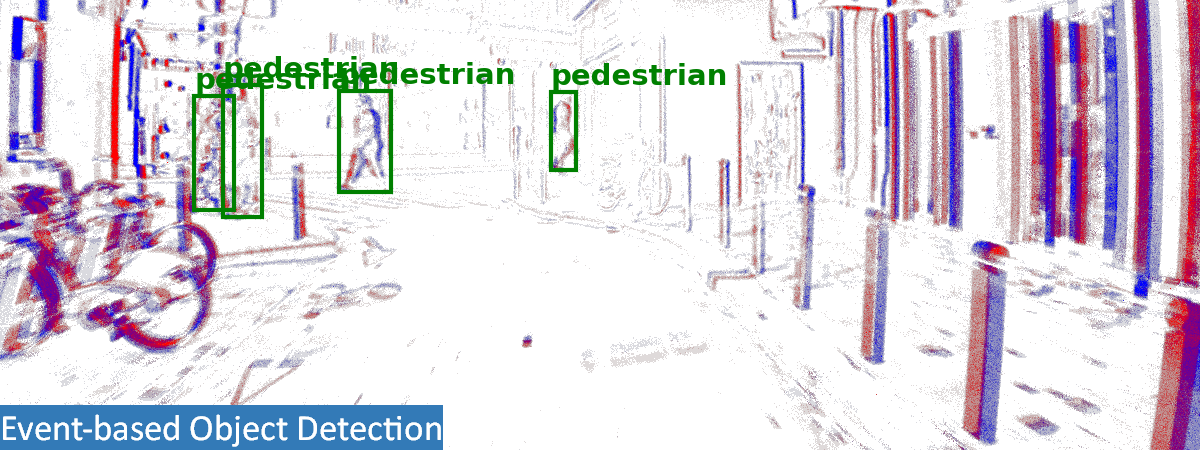

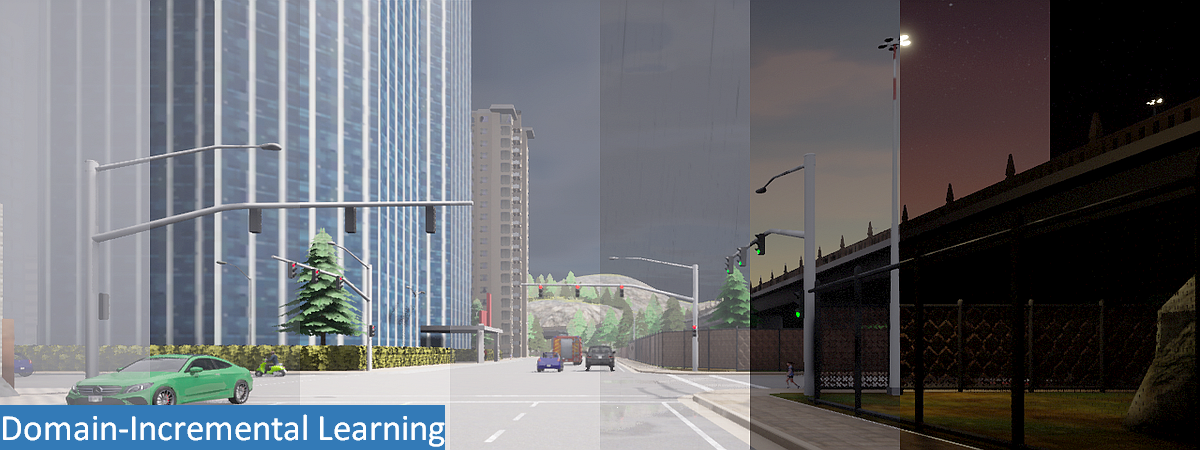

A significant focus is data-efficient learning, which aims to optimize models when training data is limited. The team is also active in continual learning, so that models can adapt to evolving scenarios over time. Additionally, they explore neuromorphic computing principles, inspired by the architecture of the human brain, to improve system efficiency and adaptability.