We are happy to announce that our article “OPA-3D: Occlusion-Aware Pixel-Wise Aggregation for Monocular 3D Object Detection” has been published in the prestigious journal IEEE Robotics and Automation Letters (RA-L). The work is a collaboration between DFKI, TU Munich, and Google. The article is openly accessible at: https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=10021668

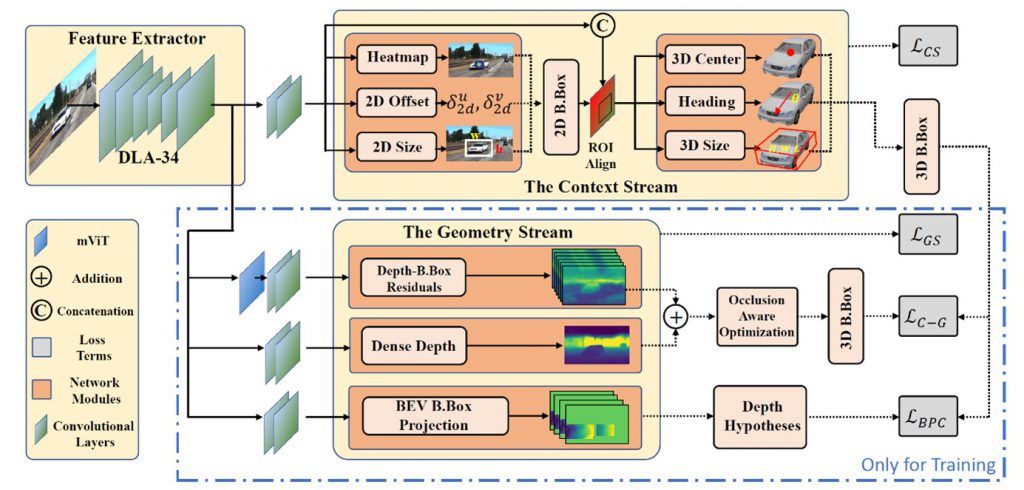

Abstract: Monocular 3D object detection has recently made a significant leap forward thanks to the use of pre-trained depth estimators for pseudo-LiDAR recovery. Yet, such two-stage methods typically suffer from overfitting and are incapable of explicitly encapsulating the geometric relation between depth and object bounding box. To overcome this limitation, we instead propose to jointly estimate dense scene depth with depth-bounding box residuals and object bounding boxes, allowing a two-stream detection of 3D objects that harnesses both geometry and context information. Thereby, the geometry stream combines visible depth and depth-bounding box residuals to recover the object bounding box via explicit occlusion-aware optimization. In addition, a bounding box based geometry projection scheme is employed in an effort to enhance distance perception. The second stream, named as the Context Stream, directly regresses 3D object location and size. This novel two-stream representation enables us to enforce cross-stream consistency terms, which aligns the outputs of both streams, and further improves the overall performance. Extensive experiments on the public benchmark demonstrate that OPA-3D outperforms state-of-the-art methods on the main Car category, whilst keeping a real-time inference speed.

Yongzhi Su, Yan Di, Guangyao Zhai, Fabian Manhardt, Jason Rambach, Benjamin Busam, Didier Stricker, and Federico Tombari. “OPA-3D: Occlusion-Aware Pixel-Wise Aggregation for Monocular 3D Object Detection.” IEEE Robotics and Automation Letters (2023).

Contacts: Yongzhi Su, Dr. Jason Rambach