Fusion of multimodal optical sensors for 3D motion capture in dense, dynamic scenes for mobile, autonomous systems

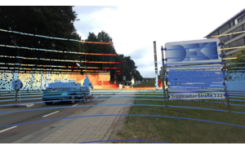

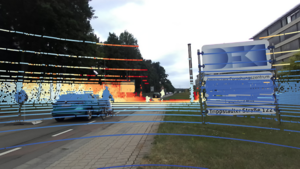

Autonomous vehicles will be an indispensable component of future mobility systems. They can significantly increase driving safety while also allowing higher traffic density. To do so, autonomously operating vehicles must continuously and accurately perceive their environment and the movements of other road users, which calls for research into new real-time capable sensor systems. Cameras and laser scanners operate on different principles and offer different advantages in capturing the environment. The aim of this project is to investigate whether and how the two sensor systems can be combined to reliably detect motion in traffic in real time. The challenge is to combine the heterogeneous data of both systems appropriately and to find suitable representations for the geometric and visual features of a traffic scene. These representations must be optimized so that reliable information can be provided for vehicle control in real time. If such a hybrid sensor system can be designed and successfully built, it could represent a breakthrough in sensor equipment for autonomous vehicles and a decisive step toward deploying this technology.

Contact

- Ramy.Battrawy@dfki.de

- Phone: +49 631 20575 3690