Magnetometer-free Inertial Motion Capture System with Visual Odometry

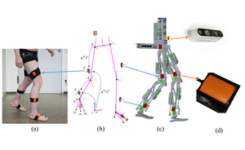

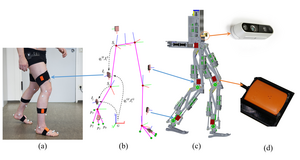

The IMCV project proposes a wearable sensor system, based on an inertial motion capture device and visual odometry, that can easily be mounted on a robot as well as on humans and delivers 3D kinematics in any environment, together with a 3D reconstruction of the surroundings.

Its objective is to develop this platform for benchmarking both exoskeletons and bipedal robots.

The project will develop a scenario-generic sensor system for humans and bipedal robots, and two benchmarking platforms will be delivered for integration into the Eurobench facilities in Spain and Italy for validation tests.

The project plans to build on recent advances in 3D kinematics estimation based on inertial measurement units (IMUs) that does not rely on magnetometers and is therefore robust against magnetic interference induced by the environment or the robot.

This allows drift-free estimation of 3D joint angles, e.g. of the lower body or of a robotic leg, in a body-attached coordinate system.
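Why a body-attached frame helps can be illustrated with quaternions. The sketch below is not the project's estimator: it assumes each segment's global orientation is known only up to the same unobservable rotation about the vertical axis (the heading drift) and shows that the relative joint rotation, and hence the joint angle, is unaffected by it. The segment names, the choice of the y axis as flexion axis and the 25° drift are hypothetical, and real magnetometer-free estimators must also handle heading that drifts differently in each sensor, which this toy case ignores.

```python
import math


def from_axis_angle(axis, deg):
    """Unit quaternion (w, x, y, z) for a rotation of `deg` degrees about a unit `axis`."""
    half = math.radians(deg) / 2.0
    s = math.sin(half)
    return (math.cos(half), axis[0] * s, axis[1] * s, axis[2] * s)


def qmul(a, b):
    """Hamilton product a ⊗ b of two quaternions in (w, x, y, z) order."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def conj(q):
    """Conjugate, i.e. the inverse rotation for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def joint_rotation(q_proximal, q_distal):
    """Orientation of the distal segment expressed in the proximal segment's frame."""
    return qmul(conj(q_proximal), q_distal)


def rotation_angle_deg(q):
    """Total rotation angle of a unit quaternion, in degrees."""
    w = min(1.0, abs(q[0]))  # clamp against rounding before acos
    return math.degrees(2.0 * math.acos(w))


Y = (0.0, 1.0, 0.0)  # hypothetical flexion axis
Z = (0.0, 0.0, 1.0)  # vertical axis: the direction of unobservable heading

thigh = from_axis_angle(Y, 10.0)  # hypothetical global segment orientations
shank = from_axis_angle(Y, 40.0)

# Apply the same hypothetical 25-degree heading drift to both segments.
drift = from_axis_angle(Z, 25.0)
thigh_drifted = qmul(drift, thigh)
shank_drifted = qmul(drift, shank)

print(rotation_angle_deg(joint_rotation(thigh, shank)))
print(rotation_angle_deg(joint_rotation(thigh_drifted, shank_drifted)))
```

Both printed angles are approximately 30°, because the common drift cancels in conj(h ⊗ q_thigh) ⊗ (h ⊗ q_shank) = conj(q_thigh) ⊗ q_shank.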

To map the environment and to correct possible global heading drift (relative to an external coordinate frame) of the magnetometer-free IMU system, visual odometry will be fused stochastically with the IMU data. The 3D point cloud recorded by the stereo camera is used in post-processing to generate a 3D reconstruction of the environment. The result should be a magnetometer-free wearable motion capture system with approximate environment mapping that works for humans and bipedal robots in any environment, both indoors and outdoors.
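The source does not specify the fusion filter. As one common way to fuse two heading sources stochastically, the sketch below uses a scalar Kalman filter on yaw: integrating the IMU yaw rate drives the prediction (its variance grows, modelling drift) and the visual-odometry heading serves as the measurement. The class name, noise variances, gyro bias and update rate are all hypothetical, and a practical filter would typically also estimate gyro bias and the full 3D orientation.

```python
import math


def wrap(angle):
    """Wrap an angle in radians to the interval (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


class HeadingKF:
    """Hypothetical scalar Kalman filter on global heading (yaw)."""

    def __init__(self, yaw0=0.0, var0=1.0, gyro_var=1e-4, vo_var=1e-2):
        self.yaw = yaw0
        self.var = var0
        self.gyro_var = gyro_var  # assumed process noise added per step (rad^2)
        self.vo_var = vo_var      # assumed VO heading measurement noise (rad^2)

    def predict(self, yaw_rate, dt):
        # Integrate the IMU yaw rate; growing variance models heading drift.
        self.yaw = wrap(self.yaw + yaw_rate * dt)
        self.var += self.gyro_var

    def update(self, vo_yaw):
        # Correct with the VO heading, weighted by the relative uncertainties.
        innovation = wrap(vo_yaw - self.yaw)
        gain = self.var / (self.var + self.vo_var)
        self.yaw = wrap(self.yaw + gain * innovation)
        self.var *= 1.0 - gain


bias, dt = 0.01, 0.01  # hypothetical gyro bias (rad/s) and sample period (s)
imu_only = 0.0
kf = HeadingKF()
for k in range(1000):  # 10 s; the true heading stays at 0 rad
    imu_only = wrap(imu_only + bias * dt)
    kf.predict(bias, dt)
    if k % 10 == 9:  # VO heading arrives at one tenth of the IMU rate
        kf.update(0.0)

print(f"IMU only: {imu_only:.3f} rad, fused: {kf.yaw:.3f} rad")
```

With these made-up numbers the integrated gyro drifts to about 0.1 rad after 10 s, while the fused estimate stays well below 0.01 rad.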

To improve localization and to detect gait events, wireless foot pressure insoles will be integrated to measure ground interaction. Together with the insoles, all the data needed to reconstruct kinetics and kinematics will be delivered and fully integrated into the Robot Operating System (ROS). A user interface will be developed for modifying the skeleton. We will also provide validation recordings with a compliant robot leg and with humans, including the computation of key gait parameters.
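As an illustration of how insole data can yield gait events, the sketch below thresholds the total force of one insole with hysteresis to find initial contacts and toe-offs, then derives stance and stride durations. The function name, the 50 N / 30 N thresholds and the synthetic signal are hypothetical; the source does not describe the project's actual event detection.

```python
def detect_gait_events(force, fs, on_n=50.0, off_n=30.0):
    """Detect initial contacts and toe-offs from total insole force.

    force: total force per sample (N); fs: sampling rate (Hz).
    Contact starts when force rises above `on_n` and ends when it falls
    below `off_n` (hypothetical thresholds). Returns event times in seconds.
    """
    in_contact = False
    contacts, toe_offs = [], []
    for i, f in enumerate(force):
        if not in_contact and f > on_n:
            in_contact = True
            contacts.append(i / fs)
        elif in_contact and f < off_n:
            in_contact = False
            toe_offs.append(i / fs)
    return contacts, toe_offs


fs = 100  # hypothetical sampling rate (Hz)
# Synthetic signal: two 0.6 s stance phases separated by a 0.4 s swing phase.
force = [0.0] * 10 + [600.0] * 60 + [0.0] * 40 + [600.0] * 60 + [0.0] * 30

contacts, toe_offs = detect_gait_events(force, fs)
stance = [off - on for on, off in zip(contacts, toe_offs)]  # stance durations (s)
stride = [b - a for a, b in zip(contacts, contacts[1:])]    # stride times (s)
print(contacts, toe_offs, stance, stride)
```

Using a higher on-threshold than off-threshold keeps sensor noise hovering around a single threshold from producing spurious events.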

Partner

Technische Universität Kaiserslautern, Dekanat Informatik