Our paper “HandVoxNet: Deep Voxel-Based Network for 3D Hand Shape and Pose Estimation from a Single Depth Map” has been accepted for publication at the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020 (CVPR 2020), which will take place from June 14 to 19, 2020, in Seattle, Washington, USA. CVPR is the “premier” conference in the field of computer vision. Our paper is one of 1470 papers accepted out of 6656 submissions (acceptance rate: 22%).

Abstract

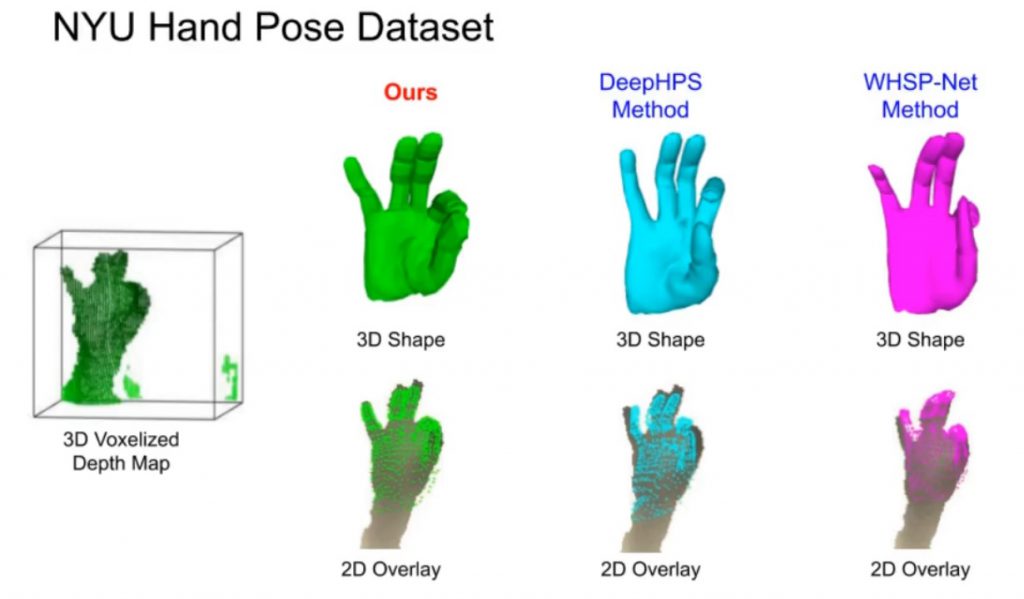

We propose a novel architecture with 3D convolutions for simultaneous 3D hand shape and pose estimation trained in a weakly-supervised manner. The input to our architecture is a 3D voxelized depth map. For shape estimation, our architecture produces two different hand shape representations. The first is the 3D voxelized grid of the shape which is accurate but does not preserve the mesh topology and the number of mesh vertices. The second representation is the 3D hand surface which is less accurate but does not suffer from the limitations of the first representation. To combine the advantages of these two representations, we register the hand surface to the voxelized hand shape. In extensive experiments, the proposed approach improves over the state-of-the-art for hand shape estimation on the SynHand5M dataset by 47.8%. Moreover, our 3D data augmentation on voxelized depth maps allows to further improve the accuracy of 3D hand pose estimation on real datasets. Our method produces visually more reasonable and realistic hand shapes of NYU and BigHand2.2M datasets compared to the existing approaches.
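To make the input representation concrete, the following is a minimal sketch of how a depth map could be turned into a 3D voxelized occupancy grid. It is not the authors' implementation: the function name `voxelize_depth_map`, the pinhole intrinsics `fx`, `fy`, `cx`, `cy`, the grid resolution `grid_size`, the cube edge length `cube_mm`, and the choice to center the cube on the point centroid are all assumptions made for illustration.

```python
def voxelize_depth_map(depth, fx, fy, cx, cy, grid_size=8, cube_mm=300.0):
    """Convert a depth map into a binary occupancy grid (illustrative sketch).

    depth: list of rows of depth values in millimetres; 0 means no measurement.
    fx, fy, cx, cy: assumed pinhole camera intrinsics.
    grid_size, cube_mm: assumed grid resolution and cube edge length.
    """
    # Back-project every valid pixel (u, v, z) to a 3D camera-space point.
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0:
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))

    grid = [[[0] * grid_size for _ in range(grid_size)] for _ in range(grid_size)]
    if not points:
        return grid

    # Assumption: the cube is centered on the centroid of the points.
    n = len(points)
    center = [sum(p[i] for p in points) / n for i in range(3)]
    voxel_mm = cube_mm / grid_size

    # Mark each voxel that contains at least one point as occupied.
    for p in points:
        idx = [int((p[i] - center[i] + cube_mm / 2) // voxel_mm) for i in range(3)]
        if all(0 <= k < grid_size for k in idx):
            grid[idx[0]][idx[1]][idx[2]] = 1
    return grid
```

A grid produced this way is the kind of volumetric input a network with 3D convolutions can consume. The resolution and cube size used here are placeholders, not values taken from the paper.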

Please find our paper here.

Authors

Muhammad Jameel Nawaz Malik, Ibrahim Abdelaziz, Ahmed Elhayek, Soshi Shimada, Sk Aziz Ali, Vladislav Golyanik, Christian Theobalt, Didier Stricker

Please also check out our video on YouTube.

Please contact Didier Stricker for more information.