Fluently – the essence of human-robot interaction

Fluently leverages the latest advances in AI-driven decision-making to achieve true social collaboration between humans and machines while adapting to highly dynamic manufacturing contexts. The Fluently Smart Interface unit features: 1) interpretation of speech content, speech tone and gestures, automatically translated into robot instructions, making industrial robots accessible to operators of any skill profile; 2) assessment of the operator’s state through a dedicated sensor infrastructure which, together with persistent context awareness, enriches an AI-based behavioural framework in charge of triggering the generation of specific robot strategies; 3) modelling of products and production changes so that they can be recognized, interpreted and matched by robots in cooperation with humans. Robots equipped with Fluently will continuously take on part of their human teammates’ physical and cognitive load, and will also learn and build experience alongside them to establish manufacturing practices built on quality and wellbeing.

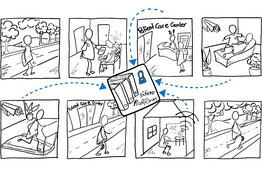

FLUENTLY targets three large-scale industrial value chains that play an instrumental role in the present and future of European manufacturing, namely: 1) dismantling and recycling of lithium cell batteries (fully manual); 2) inspection and repair of aerospace engines (partially automated); 3) laser-based multi-technology manufacturing of complex metal components, from joining and cutting to additive manufacturing and surface functionalization (fully automated in terms of equipment, but strongly dependent on human process assessment).

Partners

- REPLY DEUTSCHLAND SE (Reply), Germany,

- STMICROELECTRONICS SRL (STM), Italy,

- BIT & BRAIN TECHNOLOGIES SL (BBR), Spain,

- MORPHICA SOCIETA A RESPONSABILITA LIMITATA (MOR), Italy,

- IRIS SRL (IRIS), Italy,

- SYSTHMATA YPOLOGISTIKIS ORASHS IRIDA LABS AE (IRIDA), Greece,

- GLEECHI AB (GLE), Sweden,

- FORENINGEN ODENSE ROBOTICS (ODE), Denmark,

- TRANSITION TECHNOLOGIES PSC SPOLKA AKCYJNA (TT), Poland,

- MALTA ELECTROMOBILITY MANUFACTURING LIMITED (MEM), Malta,

- POLITECNICO DI TORINO (POLITO), Italy,

- DEUTSCHES FORSCHUNGSZENTRUM FUR KUNSTLICHE INTELLIGENZ GMBH (DFKI), Germany,

- TECHNISCHE UNIVERSITEIT EINDHOVEN (TUe), Netherlands,

- SYDDANSK UNIVERSITET (SDU), Denmark,

- COMPETENCE INDUSTRY MANUFACTURING 40 SCARL (CIM), Italy,

- PRIMA ADDITIVE SRL (PA), Italy,

- SCUOLA UNIVERSITARIA PROFESSIONALE DELLA SVIZZERA ITALIANA (SUPSI), Switzerland,

- MCH-TRONICS SAGL (MCH), Switzerland,

- FANUC SWITZERLAND GMBH (FANUC Europe), Switzerland,

- UNIVERSITY OF BATH (UBAH), United Kingdom,

- WASEDA UNIVERSITY (WUT), Japan

Contact

Dipl.-Inf. Bernd Kiefer